2024-10-23 13:44:00

quansight.com

This project was a mix of challenges and learning as I navigated the CPython C API and worked closely with the NumPy community. I want to share a behind-the-scenes look at my work on introducing a new string DType in NumPy 2.0, mostly drawn from a recent talk I gave at SciPy. In this post, I’ll walk you through the technical process, key design decisions, and the ups and downs I faced. Plus, you’ll find tips on tackling mental blocks and insights into becoming a maintainer.

By the end, I hope you’re going to have the answers to these questions:

- What was wrong with NumPy string arrays before NumPy 2.0, and why did they need to be fixed?

- How did the community fund the work that fixed it?

- How did I become a NumPy maintainer in the midst of that?

- How did I start working on the project that helped fix NumPy strings?

- What cool new feature did I add to NumPy?

A Brief History of Strings in NumPy

First, I’ll start with a brief history of strings in NumPy to explain how strings worked before NumPy 2.0 and why they were a little bit broken.

String Arrays in Python 2

Let’s go back to Python 2.7 and look at how strings worked in NumPy before the Python 3 Unicode revolution. I actually compiled Python 2 in 2024 to make this post. It doesn’t build on my ARM Mac, but it does compile on Ubuntu 22.04. Python 2 "strings" were what we now call byte strings in Python 3 – arrays of arbitrary bytes with no attached encoding. NumPy string arrays had similar behavior.

Python 2.7.18 (default, Jul 1 2024, 10:27:04)

>>> import numpy as np

>>> np.array(["hello", "world"])

array(['hello', 'world'], dtype="|S5")

>>> np.array(['hello', '☃'])

array(['hello', '\xe2\x98\x83'], dtype="|S5")

Let’s say you create an array with the contents 'hello', 'world'. You can see it gets created with the DType 'S5'. So, what does that mean? It means it’s a Python 2 string array with five characters, or five bytes, per element (characters and bytes are the same thing in Python 2).

It sort of works with Unicode if you squint at it. For instance, I wrote 'hello', '☃', and if you happen to know the UTF-8 bytes for the Unicode snowman, they are '\xe2\x98\x83'. So, it’s just taking the UTF-8 bytes from my terminal and putting them straight into the array.

![Diagram illustrating the memory layout of a NumPy string array. It shows two elements in the array: the first element contains the characters 'h', 'e', 'l', 'l', and 'o', and the second element contains encoded bytes represented as '\xe2', '\x98', '\x83', followed by two null bytes 'b\x00'. The array elements are labeled as 'arr[0]' and 'arr[1]'.](https://quansight.com/wp-content/uploads/2024/10/image1.png)

Here, we have the bytes in the Python 2 string array: the ASCII byte for 'h', the ASCII byte for 'e', and so on, and over in the second element of the array are the UTF-8 bytes for the Unicode snowman. It’s also important to know that for these fixed-width strings, if you don’t fill up the width of an element, NumPy pads the end with zeros, which are null bytes.
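The same layout can be reproduced in Python 3 with byte strings, which behave like Python 2 strings. This is a minimal sketch, not taken from the original talk; the variable names are mine.

```python
import numpy as np

# Python 3 bytes objects play the role of Python 2 "strings".
snowman = "\u2603".encode("utf-8")   # the three UTF-8 bytes of the snowman
arr = np.array([b"hello", snowman])

# Each element is a fixed-width slot of five bytes.
print(arr.dtype, arr.itemsize)

# The raw buffer shows the snowman's slot padded with two null bytes.
raw = arr.tobytes()
print(raw)

# Indexing strips the trailing padding again.
second = arr[1]
print(second)
```

Note that the padding only exists in the array buffer: reading an element back returns the bytes without the trailing nulls.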

>>> arr = np.array([u'hello', u'world'])

>>> arr

array([u'hello', u'world'], dtype='<U5')

Python 2 also had a Unicode type, so you could create an array with the contents 'hello', 'world' as Unicode strings, and that creates an array with the DType 'U5'. This works, and it’s exactly what Python 2 did with Unicode strings. Each character is UTF-32 encoded, so it takes four bytes per character.

![Diagram showing the memory layout of a NumPy string array using UTF-32 encoding. It displays two elements: 'arr[0]' contains the characters 'h', 'e', 'l', 'l', 'o', and 'arr[1]' contains the characters 'w', 'o', 'r', 'l', 'd'. Each character is represented with a prefix 'u' indicating Unicode representation. A detailed inset illustrates the UTF-32 encoding for the character 'h', represented as 'u'h'' with its corresponding byte sequence 'b'h\x00\x00\x00'.](https://quansight.com/wp-content/uploads/2024/10/image2.png)

String Arrays in Python 3

>>> arr = np.array(['hello', 'world'])

>>> arr

array(['hello', 'world'], dtype='<U5')

In Python 3, NumPy made this Unicode DType the default for strings, since Python 3 strings are Unicode strings. That was the pragmatic, easy decision, but I argue it was a bad decision, and here’s why:

>>> arr.tobytes()

b'h\x00\x00\x00e\x00\x00\x00l\x00\x00\x00l\x00\x00\x00o\x00\x00\x00w\x00\x00\x00o\x00\x00\x00r\x00\x00\x00l\x00\x00\x00d\x00\x00\x00'

If we look at the bytes actually in the array, these are all ASCII characters, so they really only need one byte each, which means there’s a bunch of zeros in the array that are just wasted. You’re using four times as much memory as is actually needed to store the array.
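To put numbers on that overhead, here is a small sketch that stores the same two ASCII words once as a Unicode array and once as a bytes array and compares the buffer sizes. The variable names are illustrative only.

```python
import numpy as np

words = ["hello", "world"]

# Default Python 3 behavior: fixed-width UTF-32, four bytes per character.
unicode_arr = np.array(words)

# The same text as one byte per character.
bytes_arr = np.array([w.encode("ascii") for w in words])

print(unicode_arr.itemsize, unicode_arr.nbytes)  # 20 bytes per element, 40 total
print(bytes_arr.itemsize, bytes_arr.nbytes)      # 5 bytes per element, 10 total

# Count the zero bytes in the UTF-32 buffer: three per ASCII character.
wasted = unicode_arr.tobytes().count(b"\x00")
print(wasted)                                    # 30 of the 40 bytes are zeros
```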

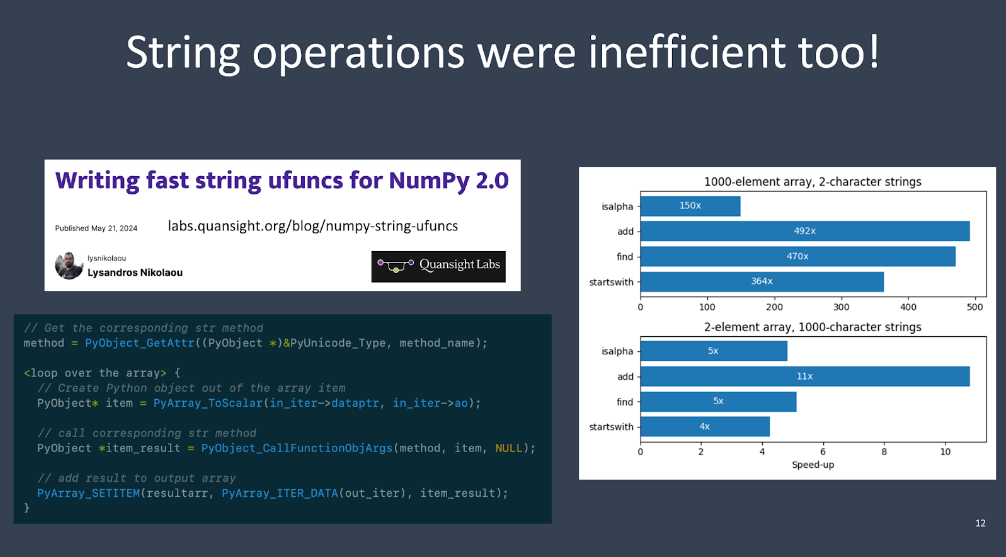

Before, it was written in C as the equivalent of a Python for-loop over the elements of the array. For each element of the array, it would create a scalar, call the string operation on that scalar, and then stuff the result into the result array. As you can imagine, that’s pretty slow. But by rewriting it to loop over the array buffer without accessing each item as a scalar, you can make it anywhere from 500 times faster for small two-element arrays to two to five times faster for longer strings.
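As a rough Python-level analogy of that per-element pattern (the real loop lived in NumPy’s C code, and this is not that code), the hypothetical helper `isalpha_per_element` below boxes each element as a scalar, calls the string method on it, and stores the result. It is compared against the whole-array call `np.char.isalpha`. Whether a given function takes the faster buffer-based path depends on your NumPy version, so the sketch only checks that the results agree and makes no timing claims.

```python
import numpy as np

arr = np.array(["hello", "world", "abc123"])

def isalpha_per_element(a):
    """Hypothetical helper mimicking the per-scalar approach."""
    out = np.empty(a.shape, dtype=bool)
    for i in range(a.size):
        scalar = a[i]                 # creates a NumPy string scalar
        out[i] = scalar.isalpha()     # calls the string method on that scalar
    return out

slow = isalpha_per_element(arr)
fast = np.char.isalpha(arr)           # one call over the whole array

print(slow, fast)
```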

Another thing people have done, and what they’ve defaulted to because of these issues with Unicode strings in NumPy, is to use object arrays.

>>> arr = np.array(

['this is a very long string', np.nan, 'another string'],

dtype=object

)

>>> arr

array(['this is a very long string', nan, 'another string'],

dtype=object)

You can create an array in NumPy with dtype=object, and it stores the Python strings and other Python objects that you put into the array directly. These are references to Python objects. If you call np.isnan on the second element of the array, you get back np.True_ because the object is np.nan, and the other elements are Python strings stored directly in the array.

>>> arr = np.array(

['this is a very long string', np.nan, 'another string'],

dtype=object

)

>>> np.isnan(arr[1])

np.True_

>>> type(arr[0])

str
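One way to see that an object array holds references rather than string data is to check object identity and the per-element size. This is a small sketch with names of my own choosing; the exact sizes printed depend on the platform and Python build.

```python
import sys
import numpy as np

s = "this is a very long string"
arr = np.array([s, np.nan, "another string"], dtype=object)

# The array element is the very same Python object, not a copy of its text.
same_object = arr[0] is s
print(same_object)

# Each slot in the buffer is only as wide as a pointer,
# while the string itself lives elsewhere on the Python heap.
print(arr.itemsize, sys.getsizeof(s))
```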
