2024-12-05 19:31:00

bit.kevinslin.com

On a rainy March day in 1538, Thomas Howard, the Duke of Norfolk, found himself confined within the cold stone walls of his grand estate. His fingers reluctantly penned a letter to sell his cherished lands to settle longstanding debts.

“A man can not have his cake and eat his cake”, he wrote, the words etched with the pain of having to confront a reality that he did not want to accept.

This was the earliest written record of the proverb “you can’t have your cake and eat it too”. It’s used in situations when we are forced to make tradeoffs that we’d rather not make.

When it comes to logging, that tradeoff is volume vs. cost. You want enough log volume to observe all your systems, and you don’t want to spend most of your infrastructure budget on logs. But you often can’t have both.

When logging costs are high, the most common response is to log less, which cuts the bill by cutting the volume.

This could be deciding that you only need ERROR logs in production. Or deciding that certain services can do without logs at all. Or only keeping logs on the host and hoping it doesn’t crash.

While this can reduce costs, it’s often a temporary bandage, one with very real downsides.

Companies log in the first place because logs are insurance. In the happy case, no one thinks about them (besides the CFO griping about the bill). In the other case, the one where things are on fire, logs are essential for engineers to diagnose and recover from an incident.

Incidents result in downtime, and downtime hurts the business’s bottom line. When Amazon went down for 40 minutes in August 2013, a Forbes article estimated that Amazon lost $66,240 per minute during the outage, roughly $2.6 million in total.

Dropping logs does decrease your costs. But you need to balance these savings with additional costs incurred by every extra second of downtime.

What if you didn’t need to choose? What if you could store all your logs while lowering your costs? Enter Lossless Log Aggregation (LLA).

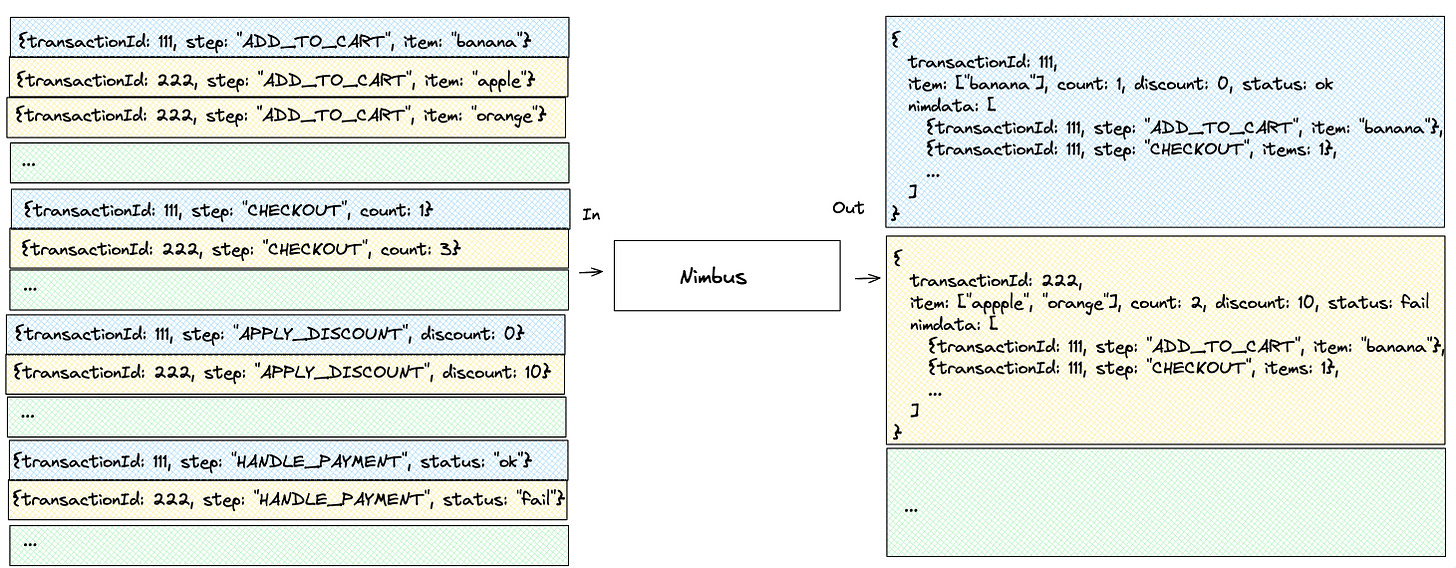

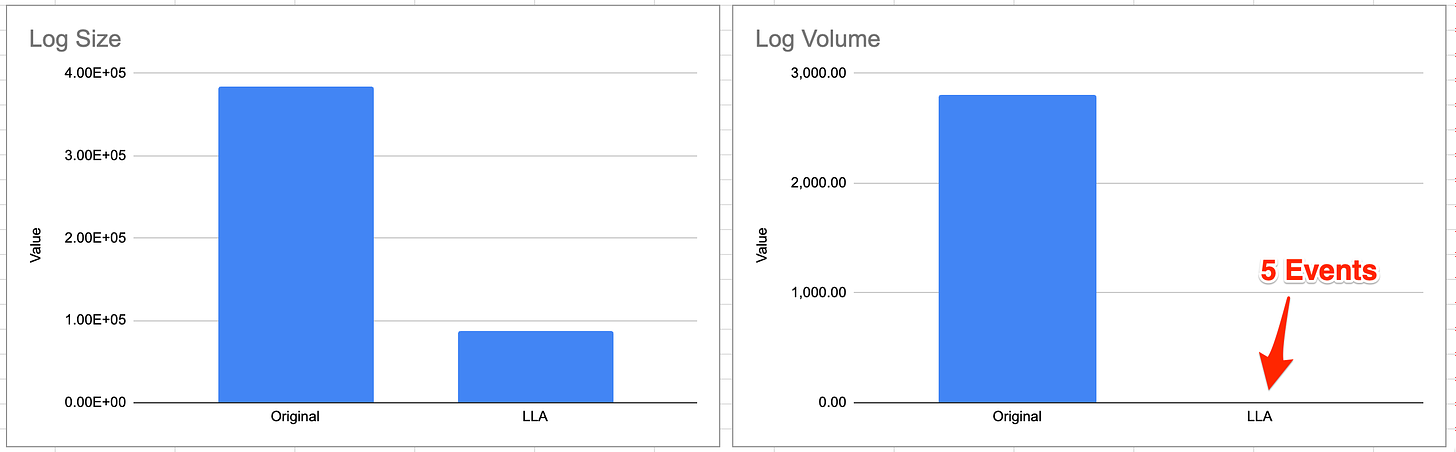

LLA is the process of merging similar logs into a single, larger aggregate log. During aggregation, common metadata and values are deduplicated and merged. When done effectively, this can yield a 100x reduction in volume (the number of log events) and a 40% reduction in size, all without dropping any data.

Illustration of LLA
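To make the idea concrete, here is a minimal Python sketch of lossless aggregation. It makes several assumptions:

- Logs have already been parsed into dicts.
- You choose the grouping fields yourself through the `key_fields` argument.
- The function names `aggregate` and `expand` are hypothetical and do not come from Nimbus or any library.

Fields that have the same value in every log of a group are stored once. Everything else, including each log's own timestamp, stays in a per-log entry under `data`.

```python
from collections import defaultdict


def aggregate(logs, key_fields):
    """Group parsed logs by key_fields and merge each group into one log.

    Fields with the same value in every member are stored once at the top
    level; the remaining fields of each member go into the `data` list.
    """
    groups = defaultdict(list)
    for log in logs:
        groups[tuple(log.get(f) for f in key_fields)].append(log)

    aggregates = []
    for members in groups.values():
        first = members[0]
        # A field is "common" if every member has it with an identical value.
        common = {
            k: v for k, v in first.items()
            if all(k in m and m[k] == v for m in members)
        }
        aggregates.append({
            **common,
            "size": len(members),
            "data": [{k: v for k, v in m.items() if k not in common} for m in members],
        })
    return aggregates


def expand(aggregated):
    """Rebuild the original logs from one aggregate produced by `aggregate`."""
    common = {k: v for k, v in aggregated.items() if k not in ("size", "data")}
    return [{**common, **entry} for entry in aggregated["data"]]
```

Because nothing is discarded, `expand` can rebuild every original event, which is what makes the aggregation lossless. The sketch assumes the original logs do not themselves use the field names `size` or `data`.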

Three types of log groups are good candidates for LLA:

- Logs with common message patterns
- Logs with common identifiers
- Multi-line logs

For before-and-after examples of each type, see the examples section of the Nimbus documentation.

Logs with common message patterns are high-volume log events that repeat most of their content. For most applications, most of the time, they are the primary driver of log volume. Examples include health checks and heartbeat notifications.
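One way to find logs with common message patterns is to mask the variable parts of each message and group by the resulting template. This sketch assumes two hypothetical masking rules:

- UUIDs
- numbers with an optional `ms`/`s` suffix

Real log-templating tools use far richer heuristics. The function names are illustrative only.

```python
import re
from collections import defaultdict

# Hypothetical masking rules, applied in order. UUIDs are masked first so that
# the number rule does not consume the digits inside them.
MASKS = [
    (re.compile(r"\b[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}\b", re.IGNORECASE), "<uuid>"),
    (re.compile(r"\b\d+(?:\.\d+)?(?:ms|s)?\b"), "<num>"),
]


def template_of(message):
    """Replace variable tokens with placeholders to get the message's pattern."""
    for pattern, placeholder in MASKS:
        message = pattern.sub(placeholder, message)
    return message


def group_by_template(messages):
    """Map each template to the list of raw messages that share it."""
    groups = defaultdict(list)
    for message in messages:
        groups[template_of(message)].append(message)
    return dict(groups)
```

Each resulting group is a candidate for aggregation. Note that a token such as `web1` is left alone, because the number rule only matches digits that start at a word boundary.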

Logs with common identifiers describe a sequence of related events. These sequences usually share an identifier such as a transactionId or a jobId. Examples include the logs of a background job and of business-specific user flows.
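For logs with common identifiers, the identifier itself is a natural grouping key. This sketch makes the following assumptions:

- Each event is a dict with an ISO 8601 `timestamp` string in a single format and timezone, so sorting the strings also sorts the events in time order.
- The default key is `jobId`; any other identifier field can be passed in.
- Events without that key are passed through untouched.

The function name is hypothetical.

```python
from collections import defaultdict


def aggregate_by_id(events, id_field="jobId"):
    """Collapse events that share `id_field` into one log per identifier.

    Assumes `timestamp` is an ISO 8601 string, so sorting the strings also
    sorts the events chronologically. Events lacking `id_field` pass through.
    """
    groups = defaultdict(list)
    passthrough = []
    for event in events:
        if id_field in event:
            groups[event[id_field]].append(event)
        else:
            passthrough.append(event)

    aggregates = []
    for ident, members in groups.items():
        members.sort(key=lambda e: e["timestamp"])
        aggregates.append({
            id_field: ident,
            "size": len(members),
            "timestamp": members[0]["timestamp"],
            "timestamp_end": members[-1]["timestamp"],
            # Each entry keeps everything except the shared identifier,
            # including its own timestamp, so no per-event detail is lost.
            "data": [{k: v for k, v in e.items() if k != id_field} for e in members],
        })
    return aggregates + passthrough
```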

Multi-line logs are logs whose message body spans multiple lines. Unless you add special logic on the agent side, the default behavior is to emit each line as a separate log event.
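A common way to handle multi-line logs is a start-of-event pattern. A line that matches the pattern opens a new event, and any other line is appended to the previous one. This sketch assumes every event begins with a `YYYY-MM-DD HH:MM:SS` timestamp. `START` and `merge_multiline` are hypothetical names; adjust the pattern to your own log format.

```python
import re

# Assumption: every new event starts with a "YYYY-MM-DD HH:MM:SS" timestamp.
# Any other line (a stack-trace frame, a wrapped message) is a continuation.
START = re.compile(r"^\d{4}-\d{2}-\d{2}[ T]\d{2}:\d{2}:\d{2}")


def merge_multiline(lines):
    """Join continuation lines onto the event that precedes them."""
    events = []
    for line in lines:
        # A line that matches START opens a new event; so does the very first
        # line, since there is nothing to attach it to.
        if START.match(line) or not events:
            events.append(line)
        else:
            events[-1] += "\n" + line
    return events
```

With this in place, a Python traceback arrives as one event instead of one event per frame.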

Below are logs from three load balancers (lb1, lb2, and lb3) performing health checks on a suite of targets.

```json
{"timestamp":"Tue Jan 10 09:15:16 2023","host":"lb1.nimbus.dev:5678","target":"web1","path":"/health","latency":"23ms","status":"passed"}
{"timestamp":"Tue Jan 10 09:15:18 2023","host":"lb2.nimbus.dev:5678","target":"web2","path":"/health","latency":"57ms","status":"passed"}
{"timestamp":"Tue Jan 10 09:15:25 2023","host":"lb1.nimbus.dev:5678","target":"web4","path":"/health","latency":"14ms","status":"passed"}
{"timestamp":"Tue Jan 10 09:15:28 2023","host":"lb2.nimbus.dev:5678","target":"web5","path":"/health","latency":"38ms","status":"passed"}
{"timestamp":"Tue Jan 10 09:16:01 2023","host":"lb3.nimbus.dev:5678","target":"web3","path":"/health","latency":"16ms","status":"passed"}
{"timestamp":"Tue Jan 10 09:17:16 2023","host":"lb1.nimbus.dev:5678","target":"web1","path":"/health","latency":"19ms","status":"passed"}
{"timestamp":"Tue Jan 10 09:17:18 2023","host":"lb2.nimbus.dev:5678","target":"web2","path":"/health","latency":"41ms","status":"passed"}
{"timestamp":"Tue Jan 10 09:17:22 2023","host":"lb3.nimbus.dev:5678","target":"web3","path":"/health","latency":"32ms","status":"passed"}
{"timestamp":"Tue Jan 10 09:17:25 2023","host":"lb1.nimbus.dev:5678","target":"web4","path":"/health","latency":"27ms","status":"passed"}
{"timestamp":"Tue Jan 10 09:17:28 2023","host":"lb2.nimbus.dev:5678","target":"web5","path":"/health","latency":"62ms","status":"passed"}
// ... more logs
```

These are the same logs after applying LLA based on host and status:

```json
{"host":"lb1.nimbus.dev:5678","status":"passed","path":"/health","data":[{"target":"web1","time":"23ms"},{"target":"web4","time":"14ms"},{"target":"web1","time":"19ms"},{"target":"web4","time":"27ms"},...],"size":700,"timestamp":"Tue Jan 10 09:15:16 2023","timestamp_end":"Tue Jan 10 09:17:25 2023"}
{"host":"lb2.nimbus.dev:5678","status":"passed","path":"/health","data":[{"target":"web2","time":"57ms"},{"target":"web5","time":"38ms"},{"target":"web2","time":"41ms"},{"target":"web5","time":"62ms"},...],"size":700,"timestamp":"Tue Jan 10 09:15:18 2023","timestamp_end":"Tue Jan 10 09:17:28 2023"}
{"host":"lb3.nimbus.dev:5678","status":"passed","path":"/health","data":[{"target":"web3","time":"16ms"},{"target":"web3","time":"32ms"},...],"size":700,"timestamp":"Tue Jan 10 09:16:01 2023","timestamp_end":"Tue Jan 10 09:17:22 2023"}
// ... more logs
```

Some things to note:

- a new attribute, `size`, has been added to indicate the number of logs aggregated
- a new field, `timestamp_end`, has been introduced to mark the time of the last log in the aggregation
- a new field, `data`, holds an array of the unique info of each log

For the above example, we’ve managed a 77% reduction in log size and a 99% reduction in log volume.
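The two percentages measure different things. Volume counts log events, while size counts bytes. The small helper below makes that arithmetic explicit. For illustration, it assumes bytes are measured as compact JSON; what your vendor actually bills on may differ. The function name is hypothetical.

```python
import json


def _nbytes(logs):
    # Compact JSON per log as a stand-in for bytes stored or sent.
    return sum(len(json.dumps(log, separators=(",", ":")).encode("utf-8")) for log in logs)


def reduction(before, after):
    """Return (volume %, size %) reductions going from `before` to `after`.

    Volume is the number of log events; size is the serialized byte count.
    Both lists must be non-empty.
    """
    volume = 100 * (1 - len(after) / len(before))
    size = 100 * (1 - _nbytes(after) / _nbytes(before))
    return round(volume, 2), round(size, 2)
```

For instance, folding 700 health checks into a single aggregate gives a volume reduction of 100 × (1 − 1/700) ≈ 99.86%. The size reduction depends on how much of each log was shared.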

LLA can be implemented in an observability pipeline: a data pipeline optimized for receiving, transforming, and sending observability data. Popular open-source options include the OpenTelemetry (OTel) Collector and Vector.

To configure a pipeline for LLA, you need to identify log groups, create forwarding rules, normalize log data before and after aggregation, and perform the aggregation itself over the correct fields.
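The first stages of such a pipeline, routing and pre-aggregation normalization, can be sketched in Python. Both stages here are illustrative assumptions:

- The forwarding rule sends only logs with `path == "/health"` to the aggregator.
- Normalization parses the example's `Tue Jan 10 09:15:16 2023` timestamps into ISO 8601 and turns `"23ms"` into an integer `latency_ms`.

In practice you would express these in your pipeline's own configuration, such as OTel Collector processors or Vector transforms. The aggregation step itself is not shown.

```python
from datetime import datetime

# Timestamps in the example logs look like "Tue Jan 10 09:15:16 2023".
TS_FORMAT = "%a %b %d %H:%M:%S %Y"


def is_aggregation_candidate(log):
    # Hypothetical forwarding rule: only health checks go to the aggregator.
    return log.get("path") == "/health"


def normalize(log):
    """Return a copy with an ISO 8601 timestamp and an integer latency_ms."""
    out = dict(log)
    out["timestamp"] = datetime.strptime(out["timestamp"], TS_FORMAT).isoformat()
    latency = out.get("latency")
    if isinstance(latency, str) and latency.endswith("ms"):
        out["latency_ms"] = int(latency[:-2])
        del out["latency"]
    return out


def route(logs):
    """Split a batch into (normalized aggregation candidates, untouched others)."""
    candidates, passthrough = [], []
    for log in logs:
        if is_aggregation_candidate(log):
            candidates.append(normalize(log))
        else:
            passthrough.append(log)
    return candidates, passthrough
```

Normalizing first means the aggregator sees one consistent shape per group, and ISO 8601 timestamps sort correctly as plain strings.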

You also need to pay attention to vendor-specific details. For example, Datadog has limits on maximum content size, array size, and the size of a single log. When aggregating Datadog logs, you need to stay within these quotas or risk losing data.

Finally, as the pipeline itself is now part of your observability stack, you will need to operationalize it and make sure it can scale to handle traffic from all your services.

If you like the idea of LLA but not necessarily the toil, consider trying Nimbus. Nimbus is the first observability pipeline that automatically analyzes log traffic, identifies high-volume log groups, and generates LLAs to reduce their volume. On average, organizations save 60% on their logging costs within the first month of use.

It’s 2024, and many companies find themselves at odds with their observability vendor – they want to ship all the logs necessary to provide a reliable service but cannot justify the expense to do so.

Like Duke Thomas, these organizations face a painful tradeoff: part with something they need, or pay for something they cannot in good conscience afford.

But unlike in the Duke’s time, there’s a third choice: lossless log aggregation. Sometimes you don’t have to choose; sometimes you can have your cake and eat it too!
