Erik Pounds

2025-01-30 18:30:00

blogs.nvidia.com

DeepSeek-R1 is an open model with state-of-the-art reasoning capabilities. Instead of offering direct responses, reasoning models like DeepSeek-R1 perform multiple inference passes over a query, using chain-of-thought, consensus and search methods to generate the best answer.

Performing this sequence of inference passes, using reasoning to arrive at the best answer, is known as test-time scaling. DeepSeek-R1 is a perfect example of this scaling law, demonstrating why accelerated computing is critical for the demands of agentic AI inference.

As models are allowed to iteratively “think” through the problem, they create more output tokens and longer generation cycles, so model quality continues to scale. Enabling both real-time inference and higher-quality responses from reasoning models like DeepSeek-R1 takes significant test-time compute, which in turn requires larger inference deployments.
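The consensus idea mentioned above can be illustrated with a minimal sketch: run several independent inference passes and keep the answer that appears most often. This is a generic majority-vote pattern, not a description of how DeepSeek-R1 works internally. The function names and the `sample` callable, which stands in for a single model call, are hypothetical.

```python
from collections import Counter
from typing import Callable, List


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent answer; ties go to the answer seen first."""
    counts = Counter(answers)
    return counts.most_common(1)[0][0]


def answer_with_consensus(sample: Callable[[str], str], query: str, passes: int) -> str:
    """Run `passes` independent inference passes and vote on the final answer.

    `sample` is a hypothetical stand-in for one call to a reasoning model.
    """
    answers = [sample(query) for _ in range(passes)]
    return majority_vote(answers)
```

Each extra pass generates more output tokens, which is why this style of test-time scaling trades additional compute for answer quality.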

R1 delivers leading accuracy for tasks demanding logical inference, reasoning, math, coding and language understanding while also delivering high inference efficiency.

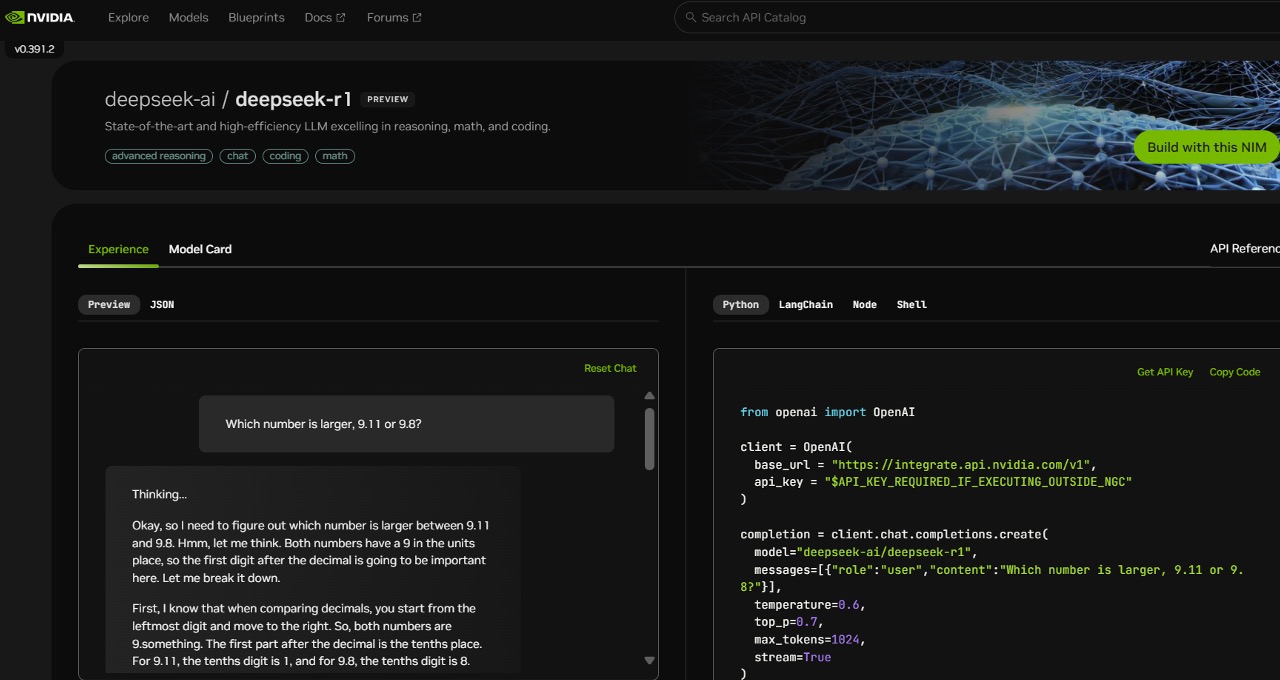

To help developers securely experiment with these capabilities and build their own specialized agents, the 671-billion-parameter DeepSeek-R1 model is now available as an NVIDIA NIM microservice preview on build.nvidia.com. The DeepSeek-R1 NIM microservice can deliver up to 3,872 tokens per second on a single NVIDIA HGX H200 system.

Developers can test and experiment with the application programming interface (API), which is expected to be available soon as a downloadable NIM microservice, part of the NVIDIA AI Enterprise software platform.

The DeepSeek-R1 NIM microservice simplifies deployments with support for industry-standard APIs. Enterprises can maximize security and data privacy by running the NIM microservice on their preferred accelerated computing infrastructure. Using NVIDIA AI Foundry with NVIDIA NeMo software, enterprises will also be able to create customized DeepSeek-R1 NIM microservices for specialized AI agents.
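As a rough illustration of what support for industry-standard APIs can look like in practice, the sketch below builds an OpenAI-style chat completion request using only the Python standard library. The base URL, the model identifier and the API key are assumptions that do not come from this article, so check build.nvidia.com for the actual values. The helper name `build_chat_request` is hypothetical.

```python
import json
import urllib.request

# Assumptions, not taken from the article: the endpoint base URL and the
# model identifier. Verify both on build.nvidia.com before use.
BASE_URL = "https://integrate.api.nvidia.com/v1"
MODEL = "deepseek-ai/deepseek-r1"


def build_chat_request(prompt: str, api_key: str, max_tokens: int = 4096) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send the request. Keeping construction separate from sending makes it easy to point the same code at a self-hosted endpoint later by changing only `BASE_URL`.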

DeepSeek-R1 — a Perfect Example of Test-Time Scaling

DeepSeek-R1 is a large mixture-of-experts (MoE) model. It has 671 billion parameters, 10x more than many other popular open-source LLMs, and supports an input context length of 128,000 tokens. The model also uses a very large number of experts per layer: each layer of R1 has 256 experts, and each token is routed to eight of those experts in parallel for evaluation.
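To make the routing step concrete, here is a minimal sketch of top-k expert selection for a single token. It uses a generic softmax gate over the selected experts, and random scores stand in for the output of a learned router. It is not DeepSeek-R1's actual gating function. Only the 256 and 8 figures come from the article; the function name is hypothetical.

```python
import math
import random
from typing import List, Tuple

NUM_EXPERTS = 256  # experts per layer, from the article
TOP_K = 8          # experts each token is routed to, from the article


def route_token(router_logits: List[float], k: int = TOP_K) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


# Random scores stand in for a learned router (an assumption for illustration).
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
chosen = route_token(logits)

# Only 8 of 256 experts run for any given token in a layer.
active_fraction = TOP_K / NUM_EXPERTS
```

Because different tokens select different experts, and those experts may sit on different GPUs, routing of this kind is what drives the need for fast GPU-to-GPU communication.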

Delivering real-time answers for R1 requires many GPUs with high compute performance, connected with high-bandwidth and low-latency communication to route prompt tokens to all the experts for inference. Combined with the software optimizations available in the NVIDIA NIM microservice, a single server with eight H200 GPUs connected using NVLink and NVLink Switch can run the full, 671-billion-parameter DeepSeek-R1 model at up to 3,872 tokens per second. This throughput is made possible by using the NVIDIA Hopper architecture’s FP8 Transformer Engine at every layer — and the 900 GB/s of NVLink bandwidth for MoE expert communication.
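A quick back-of-envelope calculation shows why a single eight-GPU server is enough for the full model. The sketch assumes every weight is stored in FP8 (one byte per parameter) and that each H200 has 141 GB of memory. The memory figure is not stated in this article. Real deployments also need space for the KV cache and activations, so treat the headroom number as a rough upper bound.

```python
PARAMS = 671e9            # parameters, from the article
BYTES_PER_PARAM_FP8 = 1   # FP8 stores one byte per value
GPUS = 8                  # H200 GPUs in the server, from the article
HBM_PER_GPU_GB = 141      # assumption: H200 memory capacity, not from the article
TOKENS_PER_SECOND = 3872  # peak system throughput, from the article

weights_gb = PARAMS * BYTES_PER_PARAM_FP8 / 1e9
total_hbm_gb = GPUS * HBM_PER_GPU_GB
headroom_gb = total_hbm_gb - weights_gb
tokens_per_second_per_gpu = TOKENS_PER_SECOND / GPUS

print(f"FP8 weights:        {weights_gb:.0f} GB")
print(f"Total GPU memory:   {total_hbm_gb} GB")
print(f"Headroom:           {headroom_gb:.0f} GB")
print(f"Throughput per GPU: {tokens_per_second_per_gpu:.0f} tokens/s")
```

Under these assumptions the weights occupy about 671 GB of roughly 1,128 GB, and the quoted peak throughput works out to 484 tokens per second per GPU.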

Getting every floating point operation per second (FLOPS) of performance out of a GPU is critical for real-time inference. The next-generation NVIDIA Blackwell architecture will give test-time scaling on reasoning models like DeepSeek-R1 a giant boost with fifth-generation Tensor Cores that can deliver up to 20 petaflops of peak FP4 compute performance and a 72-GPU NVLink domain specifically optimized for inference.

Get Started Now With the DeepSeek-R1 NIM Microservice

Developers can experience the DeepSeek-R1 NIM microservice, now available on build.nvidia.com.

With NVIDIA NIM, enterprises can deploy DeepSeek-R1 with ease and ensure they get the high efficiency needed for agentic AI systems.
