Divya

2025-02-28 00:09:00

gbhackers.com

A sweeping analysis of the Common Crawl dataset—a cornerstone of training data for large language models (LLMs) like DeepSeek—has uncovered 11,908 live API keys, passwords, and credentials embedded in publicly accessible web pages.

The leaked secrets, which authenticate successfully with services ranging from AWS to Slack and Mailchimp, highlight systemic risks in AI development pipelines as models inadvertently learn insecure coding practices from exposed data.

Researchers at Truffle Security traced the root cause to widespread credential hardcoding across 2.76 million web pages archived in the December 2024 Common Crawl snapshot, raising urgent questions about safeguards for AI-generated code.

The Anatomy of the DeepSeek Training Data Exposure

The Common Crawl dataset, a 400-terabyte repository of web content scraped from 2.67 billion pages, serves as foundational training material for DeepSeek and other leading LLMs.

When Truffle Security scanned this corpus using its open-source TruffleHog tool, it discovered not only thousands of valid credentials but also troubling reuse patterns.

For instance, a single WalkScore API key appeared 57,029 times across 1,871 subdomains, while one webpage contained 17 unique Slack webhooks hardcoded into front-end JavaScript.

Mailchimp API keys dominated the leak, with 1,500 unique keys enabling potential phishing campaigns and data theft.

Infrastructure at Scale: Scanning 90,000 Web Archives

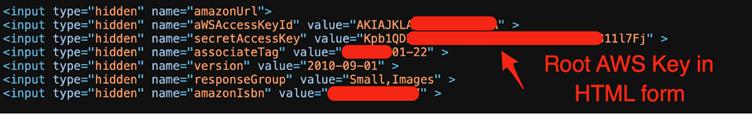

To process Common Crawl’s 90,000 WARC (Web ARChive) files, Truffle Security deployed a distributed system across 20 high-performance servers.

Each node downloaded 4GB compressed files, split them into individual web records, and ran TruffleHog to detect and verify live secrets.
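
The per-node loop described above (split an archive into records, then scan each record) can be sketched roughly as follows. This is a minimal illustration, not Truffle Security's implementation: the hand-rolled record reader, the function names, and the single regex standing in for TruffleHog's detectors are all assumptions, and nothing here verifies a key against a live service.

```python
import io
import re

# Stand-in detector (assumption): the widely documented shape of an AWS access key ID.
AWS_KEY_ID = re.compile(rb"\bAKIA[0-9A-Z]{16}\b")


def iter_warc_records(stream):
    """Yield (headers, body) for each record in an uncompressed WARC byte stream."""
    while True:
        line = stream.readline()
        if not line:
            return                      # end of stream
        if not line.strip():
            continue                    # blank lines that separate records
        if not line.startswith(b"WARC/"):
            raise ValueError(f"unexpected line: {line!r}")
        headers = {}
        while True:
            line = stream.readline()
            if not line.strip():
                break                   # blank line (or EOF) ends the header block
            name, _, value = line.decode("utf-8", "replace").partition(":")
            headers[name.strip().lower()] = value.strip()
        body = stream.read(int(headers.get("content-length", "0")))
        yield headers, body


def scan_records(stream):
    """Return (target URI, candidate secret) pairs for every regex hit."""
    hits = []
    for headers, body in iter_warc_records(stream):
        for match in AWS_KEY_ID.finditer(body):
            hits.append((headers.get("warc-target-uri", ""), match.group().decode()))
    return hits


def make_record(uri, payload):
    """Build one synthetic WARC record, for demonstration only."""
    head = (
        f"WARC/1.0\r\nWARC-Type: response\r\nWARC-Target-URI: {uri}\r\n"
        f"Content-Length: {len(payload)}\r\n\r\n"
    ).encode()
    return head + payload + b"\r\n\r\n"


fake_key = b"AKIA" + b"A" * 16          # synthetic value, not a real credential
sample = (
    make_record("https://a.example/", b"<script>var k='" + fake_key + b"';</script>")
    + make_record("https://b.example/", b"<p>nothing here</p>")
)
hits = scan_records(io.BytesIO(sample))
print(hits)
```

Common Crawl distributes its archives gzip-compressed, so for a real file a stream opened with Python's `gzip.open(path, "rb")` should be usable in place of the in-memory sample. A production pipeline would more likely use a dedicated WARC library and TruffleHog itself for detection and verification.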

To quantify real-world risks, the team prioritized verified credentials—keys that actively authenticated with their respective services.

Notably, 63% of secrets were reused across multiple sites, amplifying breach potential.
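
A reuse figure like this can be derived by grouping detections by secret and counting distinct pages. The sketch below assumes that "reused" means a secret seen on more than one page, which the article does not spell out; the function name and sample data are hypothetical.

```python
from collections import defaultdict


def reuse_stats(hits):
    """hits: iterable of (page_url, secret_fingerprint) pairs."""
    pages_by_secret = defaultdict(set)
    for page_url, fingerprint in hits:
        pages_by_secret[fingerprint].add(page_url)
    # A secret counts as reused when it appears on more than one distinct page.
    reused = {fp for fp, pages in pages_by_secret.items() if len(pages) > 1}
    share = len(reused) / len(pages_by_secret) if pages_by_secret else 0.0
    return dict(pages_by_secret), reused, share


example_hits = [
    ("https://a.example/", "key-1"),
    ("https://b.example/", "key-1"),
    ("https://b.example/", "key-1"),  # repeat on the same page is counted once
    ("https://c.example/", "key-2"),
]
pages, reused, share = reuse_stats(example_hits)
print(f"{share:.0%} of unique secrets appear on more than one page")
```

In practice it would be safer to key the table on a hash of each secret rather than the secret itself, so the analysis output does not become another leak.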

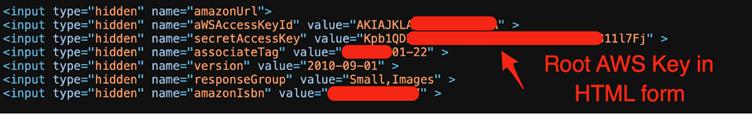

This technical feat revealed startling cases like an AWS root key embedded in front-end HTML for S3 Basic Authentication—a practice with no functional benefit but grave security implications.

Researchers also identified software firms recycling API keys across client sites, inadvertently exposing customer lists.

Why LLMs Like DeepSeek Amplify the Threat

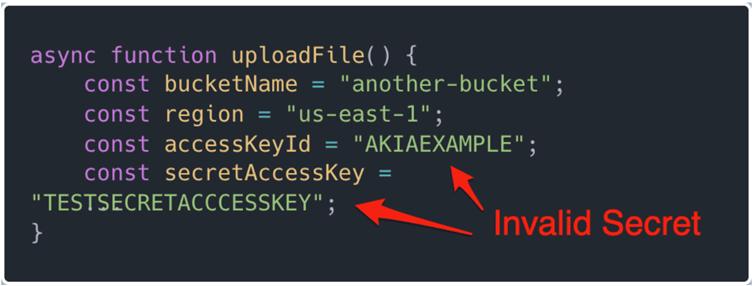

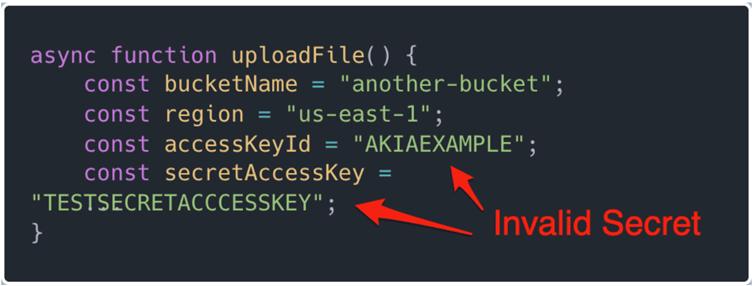

While Common Crawl’s data reflects broader internet security failures, integrating these examples into LLM training sets creates a feedback loop.

Models cannot distinguish between live keys and placeholder examples during training, so both kinds of example reinforce insecure patterns such as credential hardcoding.

This issue gained attention last month when researchers observed LLMs repeatedly instructing developers to embed secrets directly into code—a practice traceable to flawed training examples.

The Verification Gap in AI-Generated Code

Truffle Security’s findings underscore a critical blind spot: even if 99% of detected secrets were invalid, their sheer volume in training data would still skew LLM outputs toward insecure recommendations.

For instance, a model exposed to thousands of front-end Mailchimp API keys may favor the convenient pattern over the secure one, suggesting that the key be embedded in client code rather than read from an environment variable on the backend.
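
For contrast, the backend pattern mentioned above might look like the following sketch. The variable name `MAILCHIMP_API_KEY`, the helper, and the exception class are hypothetical choices for illustration, not part of any Mailchimp SDK.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not configured."""


def load_api_key(name="MAILCHIMP_API_KEY", env=None):
    """Read a secret from the environment instead of hardcoding it in source."""
    env = os.environ if env is None else env
    value = env.get(name, "").strip()
    if not value:
        # Fail loudly rather than falling back to a literal default in code.
        raise MissingSecretError(f"set the {name} environment variable")
    return value


# Demonstration with an explicit mapping; a real service would rely on os.environ.
api_key = load_api_key(env={"MAILCHIMP_API_KEY": " example-value "})
```

The key stays on the server and out of version control and page source, so a crawler archiving the site never sees it.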

This problem persists across all major LLM training datasets derived from public code repositories and web content.

Industry Responses and Mitigation Strategies

In response, Truffle Security advocates for multilayered safeguards. Developers using AI coding assistants can implement Copilot Instructions or Cursor Rules to inject security guardrails into LLM prompts.

For example, a rule specifying “Never suggest hardcoded credentials” steers models toward secure alternatives.

On an industry level, researchers propose techniques like Constitutional AI to embed ethical constraints directly into model behavior, reducing harmful outputs.

However, this requires collaboration between AI developers and cybersecurity experts to audit training data and implement robust redaction pipelines.

This incident underscores the need for proactive measures:

- Expand secret scanning to public datasets like Common Crawl and GitHub.

- Reevaluate AI training pipelines to filter or anonymize sensitive data.

- Enhance developer education on secure credential management.
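
As one illustration of the filtering step in the list above, a redaction pass over training text could be sketched like this. The two patterns are simplified, commonly cited credential shapes chosen for the example; they are assumptions, not TruffleHog's detectors, and a real pipeline would need far broader coverage.

```python
import re

# Illustrative patterns only (assumptions), keyed by a label used in the placeholder.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "slack_webhook": re.compile(r"https://hooks\.slack\.com/services/[A-Za-z0-9/_-]+"),
}


def redact(text):
    """Replace each match with a labeled placeholder and report counts per label."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"<REDACTED:{label}>", text)
        counts[label] = n
    return text, counts


# Synthetic values assembled at runtime; neither is a real credential.
fake_hook = "https://hooks.slack.com/services/" + "T000/B000/XXXX"
fake_key = "AKIA" + "A" * 16
page = f'fetch("{fake_hook}"); const key = "{fake_key}";'
clean, counts = redact(page)
print(clean)
```

Replacing secrets with labeled placeholders, rather than deleting whole pages, keeps the surrounding code available for training while removing the live value.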

As LLMs like DeepSeek become integral to software development, securing their training ecosystems isn’t optional—it’s existential.

The nearly 12,000 leaked keys are merely a symptom of a deeper ailment: our collective failure to sanitize the data shaping tomorrow’s AI.
