Kayla Zhu

2025-04-25 10:44:00

www.visualcapitalist.com

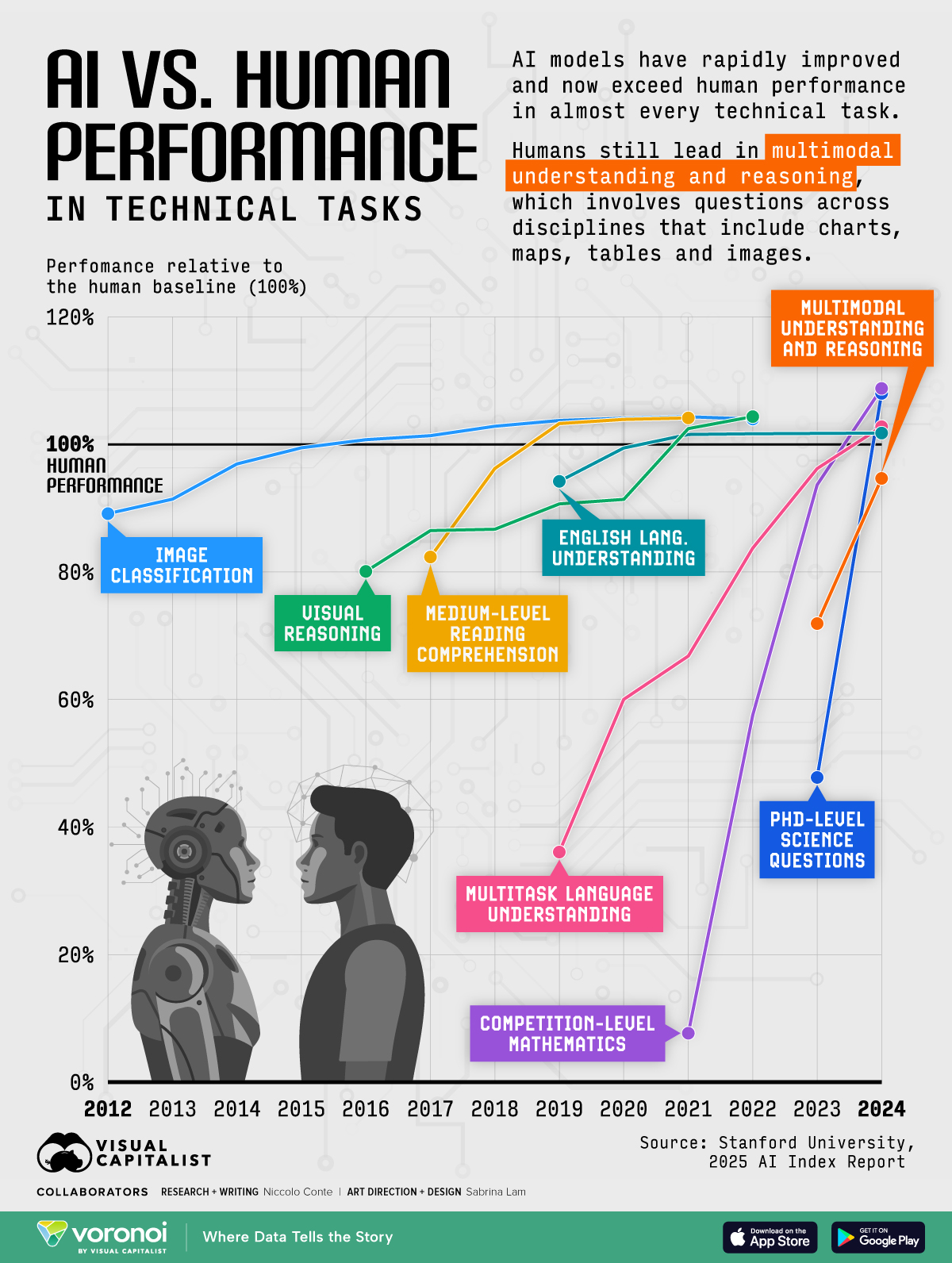

AI vs. Human Performance in Technical Tasks

This was originally posted on our Voronoi app. Download the app for free on iOS or Android and discover incredible data-driven charts from a variety of trusted sources.

The gap between human and machine reasoning is narrowing—and fast.

Over the past year, AI systems have continued to advance rapidly, surpassing human performance in technical tasks where they previously fell short, such as advanced math and visual reasoning.

This graphic visualizes AI systems’ performance relative to human baselines for eight AI benchmarks measuring tasks including:

- Image classification

- Visual reasoning

- Medium-level reading comprehension

- English language understanding

- Multitask language understanding

- Competition-level mathematics

- PhD-level science questions

- Multimodal understanding and reasoning

This visualization is part of Visual Capitalist’s AI Week, sponsored by Terzo. Data comes from the Stanford University 2025 AI Index Report.

An AI benchmark is a standardized test used to evaluate the performance and capabilities of AI systems on specific tasks.

AI Models Are Surpassing Humans in Technical Tasks

Below, we show how AI models have performed relative to the human baseline in various technical tasks in recent years.

| Year | Performance relative to the human baseline (100%) | Task |

|---|---|---|

| 2012 | 89.15% | Image classification |

| 2013 | 91.42% | Image classification |

| 2014 | 96.94% | Image classification |

| 2015 | 99.47% | Image classification |

| 2016 | 100.74% | Image classification |

| 2016 | 80.09% | Visual reasoning |

| 2017 | 101.37% | Image classification |

| 2017 | 82.35% | Medium-level reading comprehension |

| 2017 | 86.49% | Visual reasoning |

| 2018 | 102.85% | Image classification |

| 2018 | 96.23% | Medium-level reading comprehension |

| 2018 | 86.70% | Visual reasoning |

| 2019 | 103.75% | Image classification |

| 2019 | 36.08% | Multitask language understanding |

| 2019 | 103.27% | Medium-level reading comprehension |

| 2019 | 94.21% | English language understanding |

| 2019 | 90.67% | Visual reasoning |

| 2020 | 104.11% | Image classification |

| 2020 | 60.02% | Multitask language understanding |

| 2020 | 103.92% | Medium-level reading comprehension |

| 2020 | 99.44% | English language understanding |

| 2020 | 91.38% | Visual reasoning |

| 2021 | 104.34% | Image classification |

| 2021 | 7.67% | Competition-level mathematics |

| 2021 | 66.82% | Multitask language understanding |

| 2021 | 104.15% | Medium-level reading comprehension |

| 2021 | 101.56% | English language understanding |

| 2021 | 102.48% | Visual reasoning |

| 2022 | 103.98% | Image classification |

| 2022 | 57.56% | Competition-level mathematics |

| 2022 | 83.74% | Multitask language understanding |

| 2022 | 101.67% | English language understanding |

| 2022 | 104.36% | Visual reasoning |

| 2023 | 47.78% | PhD-level science questions |

| 2023 | 93.67% | Competition-level mathematics |

| 2023 | 96.21% | Multitask language understanding |

| 2023 | 71.91% | Multimodal understanding and reasoning |

| 2024 | 108.00% | PhD-level science questions |

| 2024 | 108.78% | Competition-level mathematics |

| 2024 | 102.78% | Multitask language understanding |

| 2024 | 94.67% | Multimodal understanding and reasoning |

| 2024 | 101.78% | English language understanding |

From ChatGPT to Gemini, many of the world’s leading AI models are surpassing the human baseline in a range of technical tasks.

The only task where AI systems still haven’t caught up to humans is multimodal understanding and reasoning, which involves processing and reasoning across multiple formats and disciplines, such as images, charts, and diagrams.
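As a quick check of that claim against the table, the sketch below takes the most recent table entry for each task and lists the tasks still under the 100% human baseline. The values are copied from the table; the dictionary layout and the function name `tasks_below_baseline` are illustrative assumptions, not part of the AI Index Report.

```python
# Most recent table entry per task: (year, performance relative to the human baseline, in %).
# Values are copied from the table above; the structure itself is an illustrative assumption.
latest = {
    "Image classification": (2022, 103.98),
    "Visual reasoning": (2022, 104.36),
    "Medium-level reading comprehension": (2021, 104.15),
    "English language understanding": (2024, 101.78),
    "Multitask language understanding": (2024, 102.78),
    "Competition-level mathematics": (2024, 108.78),
    "PhD-level science questions": (2024, 108.00),
    "Multimodal understanding and reasoning": (2024, 94.67),
}

HUMAN_BASELINE = 100.0  # the table expresses the human baseline as 100%


def tasks_below_baseline(latest_scores, baseline=HUMAN_BASELINE):
    """Return the tasks whose most recent score is still under the baseline."""
    return sorted(
        task for task, (_year, score) in latest_scores.items() if score < baseline
    )


print(tasks_below_baseline(latest))
```

Under these table values, multimodal understanding and reasoning is the only task returned.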

However, the gap is closing quickly.

In 2024, OpenAI’s o1 model scored 78.2% on MMMU, a benchmark that evaluates models on multi-discipline tasks demanding college-level subject knowledge.

This was just 4.4 percentage points below the human benchmark of 82.6%. The o1 model also has one of the lowest hallucination rates out of all AI models.

This was a major jump from the end of 2023, when Google Gemini scored just 59.4%, highlighting the rapid improvement of AI performance in these technical tasks.
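The raw MMMU scores quoted here appear consistent with the table if the "relative to the human baseline" figure is taken as the model score divided by the human score. That formula is an assumption inferred from the numbers rather than something the article states, and the function name `relative_to_human` is illustrative. A minimal sketch of the arithmetic:

```python
# Raw MMMU scores quoted in the article, in percent.
HUMAN_MMMU = 82.6
O1_2024 = 78.2
GEMINI_2023 = 59.4


def relative_to_human(model_score, human_score):
    """Express a model score as a percentage of the human score (assumed formula)."""
    return 100.0 * model_score / human_score


# Remaining gap to the human score, in percentage points.
gap_points = round(HUMAN_MMMU - O1_2024, 1)

# Year-over-year improvement, in percentage points.
jump_points = round(O1_2024 - GEMINI_2023, 1)

print(gap_points)                                             # 4.4
print(jump_points)                                            # 18.8
print(round(relative_to_human(O1_2024, HUMAN_MMMU), 2))       # 94.67
print(round(relative_to_human(GEMINI_2023, HUMAN_MMMU), 2))   # 71.91
```

The last two values match the 2024 and 2023 table rows for multimodal understanding and reasoning (94.67% and 71.91%), which supports the assumed formula for this benchmark.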



