Kyt Dotson

2025-05-13 12:00:00

siliconangle.com

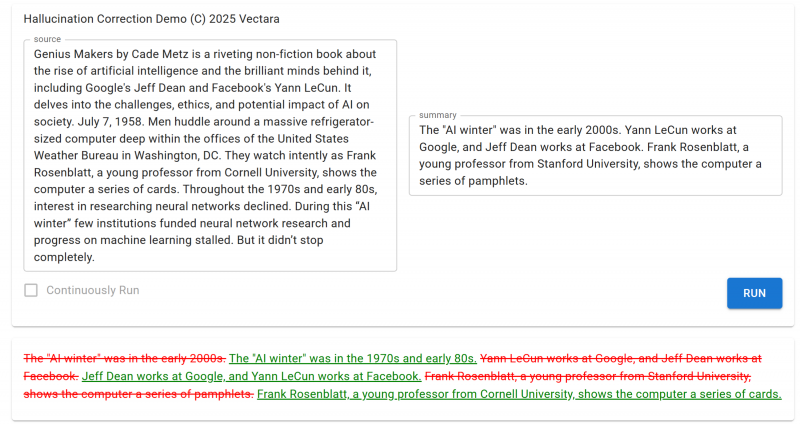

Artificial intelligence agent and assistant platform provider Vectara Inc. today announced the launch of a new Hallucination Corrector directly integrated into its service, designed to detect and mitigate costly, unreliable responses from enterprise AI models.

Hallucinations, in which generative AI large language models confidently provide false information, have long plagued the industry. For traditional models, they are estimated to occur in about 3% to 10% of queries on average, depending on the model.

The recent advent of reasoning AI models, which break down complex questions into step-by-step solutions to “think” through them, has led to a noted increase in the hallucination rate.

According to a report from Vectara, DeepSeek-R1, a reasoning model, hallucinates significantly more, at 14.3%, than its predecessor DeepSeek-V3, at 3.9%. Similarly, OpenAI’s o1, also a reasoning model, rose to a 2.4% rate from GPT-4o’s 1.5%. New Scientist published a similar report and found even higher rates with the same and other reasoning models.

“While LLMs have recently made significant progress in addressing the issue of hallucinations, they still fall distressingly short of the standards for accuracy that are required in highly regulated industries like financial services, healthcare, law and many others,” said Vectara founder and Chief Executive Amr Awadallah.

In its initial testing, Vectara said the Hallucination Corrector reduced hallucination rates in enterprise AI systems to about 0.9%.

It works in conjunction with the company’s already widely used Hughes Hallucination Evaluation Model (HHEM), which compares responses to source documents and identifies at runtime whether statements are accurate.

HHEM scores an answer against its source with a probability between 0 and 1, where 0 means completely inaccurate (a total hallucination) and 1 means perfect accuracy. For example, a score of 0.98 indicates a 98% probability that the answer is factually consistent with the source. HHEM is available on Hugging Face and received over 250,000 downloads last month, making it one of the most popular hallucination detectors on the platform.
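To make the scoring scale concrete, here is a minimal sketch of how an application might turn such a score into a pass/fail label. The function name, the 0.5 cutoff and the sample scores are all assumptions for illustration; the article does not say what threshold Vectara or its customers use.

```python
def interpret_score(score: float, threshold: float = 0.5) -> str:
    """Label a consistency score in [0, 1].

    The 0.5 threshold is a hypothetical default, not a documented value.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    return "consistent" if score >= threshold else "hallucinated"


# Made-up scores for three answers, for illustration only.
scores = {"answer_a": 0.98, "answer_b": 0.51, "answer_c": 0.07}
labels = {name: interpret_score(value) for name, value in scores.items()}

for name, label in labels.items():
    print(f"{name}: {scores[name]:.2f} -> {label}")
```

A stricter deployment, such as one in a regulated industry, could pass a higher `threshold` so that borderline answers like `answer_b` are flagged as well.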

In the case of a factually inconsistent response, the Corrector provides a detailed output including an explanation of why the statement is a hallucination and a corrected version incorporating minimal changes for accuracy.

The company said it automatically uses the corrected output in summaries for end-users, but experts can use the full explanation and suggested fixes when testing applications to refine or fine-tune their models and guardrails to combat hallucinations. It can also show the original summary while using the correction information to flag potential issues and offering the corrected summary as an optional fix.
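The two delivery modes described here can be sketched in a few lines. This is an illustration only: the `Correction` class, its field names and the `render` function are hypothetical and do not reflect Vectara's actual API or response format.

```python
from dataclasses import dataclass


@dataclass
class Correction:
    """Hypothetical container for a corrector result (not Vectara's schema)."""
    original: str
    corrected: str
    explanation: str


def render(result: Correction, mode: str = "auto") -> dict:
    """Choose what an end-user sees.

    "auto": silently use the corrected summary.
    "flag": keep the original, flag it, and offer the fix as optional.
    """
    if mode == "auto":
        return {"summary": result.corrected}
    if mode == "flag":
        return {
            "summary": result.original,
            "flagged": result.original != result.corrected,
            "suggested_fix": result.corrected,
            "explanation": result.explanation,
        }
    raise ValueError(f"unknown mode: {mode}")


# Made-up example data.
example = Correction(
    original="The policy covers flood damage up to $50,000.",
    corrected="The policy covers flood damage up to $5,000.",
    explanation="The source document states a $5,000 limit.",
)
auto_view = render(example, "auto")
flag_view = render(example, "flag")
print(auto_view["summary"])
print(flag_view["flagged"], flag_view["suggested_fix"])
```

Keeping the explanation alongside the suggested fix in the flagged view mirrors the expert workflow described above, where the full output is used for testing and refining guardrails.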

For LLM answers that are misleading but not quite outright false, the Hallucination Corrector can refine the response to reduce its uncertainty score according to the customer’s settings.

Images: geralt/Pixabay, Vectara
