2025-07-22 17:12:00

qwenlm.github.io

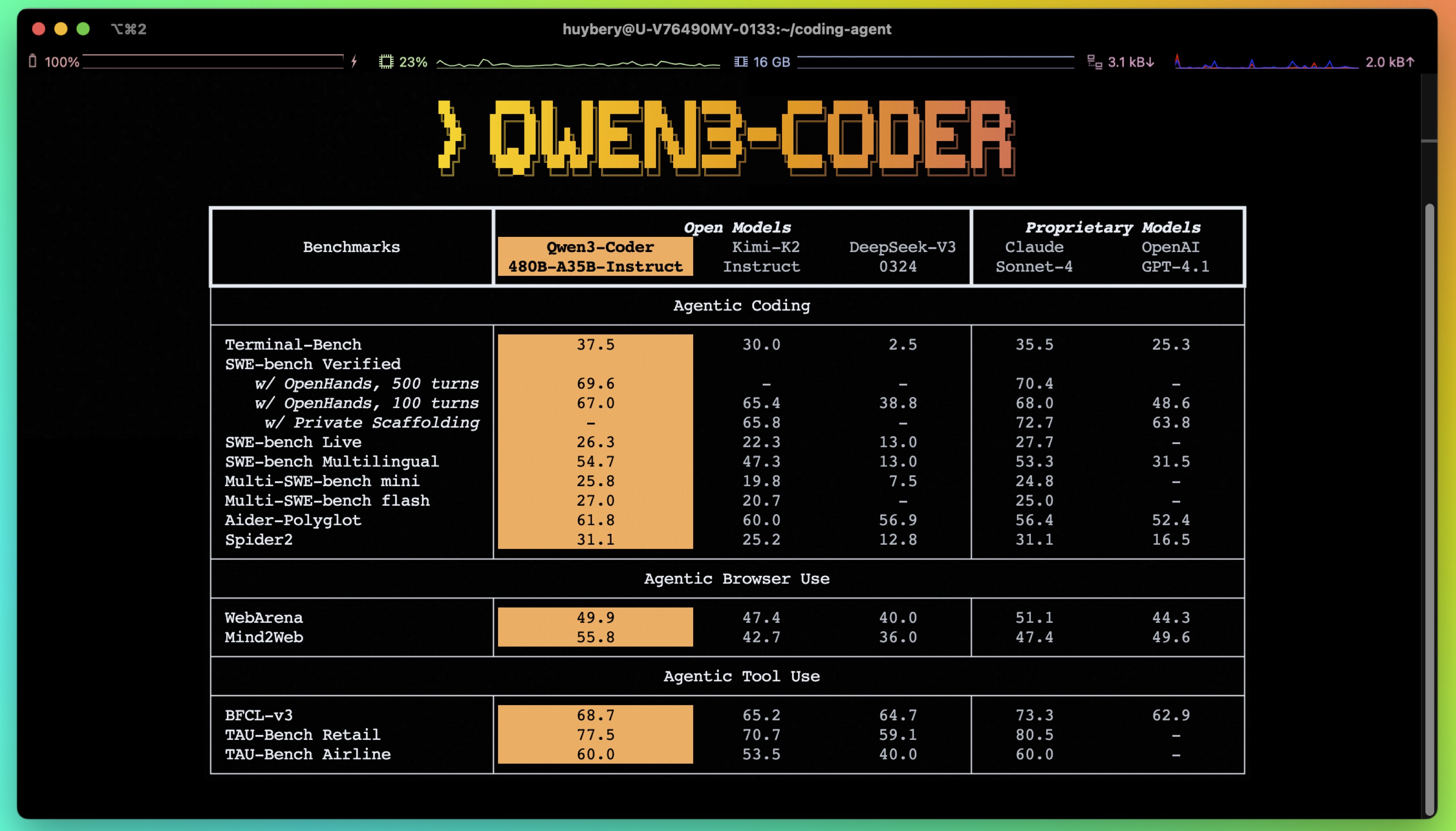

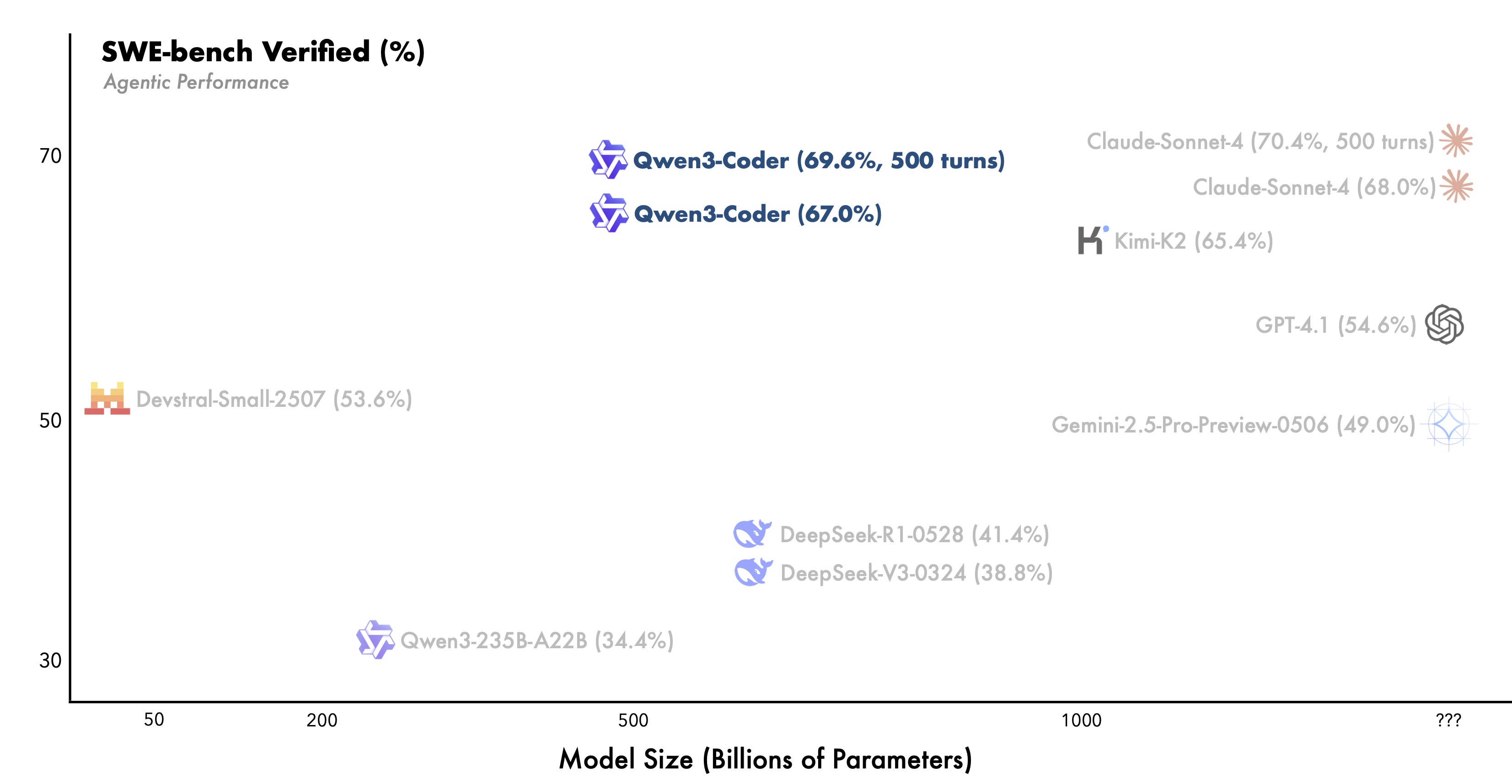

Today, we’re announcing Qwen3-Coder, our most agentic code model to date. Qwen3-Coder is available in multiple sizes, but we’re excited to introduce its most powerful variant first: Qwen3-Coder-480B-A35B-Instruct, a 480B-parameter Mixture-of-Experts model with 35B active parameters. It natively supports a context length of 256K tokens, extends to 1M tokens with extrapolation methods, and performs strongly on both coding and agentic tasks. Qwen3-Coder-480B-A35B-Instruct sets new state-of-the-art results among open models on Agentic Coding, Agentic Browser-Use, and Agentic Tool-Use, with results comparable to Claude Sonnet 4.

Alongside the model, we’re also open-sourcing a command-line tool for agentic coding: Qwen Code. Forked from Gemini CLI, Qwen Code has been adapted with customized prompts and function calling protocols to fully unleash the capabilities of Qwen3-Coder on agentic coding tasks. Qwen3-Coder also works seamlessly with the community’s best developer tools. As a foundation model, we hope it can be used anywhere across the digital world: Agentic Coding in the World!

Qwen3-Coder

Pre-Training

There’s still room to scale in pretraining—and with Qwen3-Coder, we’re advancing along multiple dimensions to strengthen the model’s core capabilities:

- Scaling Tokens: 7.5T tokens (70% code ratio), excelling in coding while preserving general and math abilities.

- Scaling Context: Natively supports 256K context and can be extended up to 1M with YaRN, optimized for repo-scale and dynamic data (e.g., Pull Requests) to empower Agentic Coding.

- Scaling Synthetic Data: Leveraged Qwen2.5-Coder to clean and rewrite noisy data, significantly improving overall data quality.
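As a quick sanity check on the figures above, the arithmetic can be sketched in a few lines. This assumes "256K" and "1M" mean 256 × 1024 and 1024 × 1024 tokens, and that the extension factor is simply the ratio of target to native context; the post does not state the exact YaRN configuration, so treat the factor as illustrative.

```python
# Figures quoted in the post.
TOTAL_TOKENS = 7.5e12          # 7.5T pre-training tokens
CODE_RATIO = 0.70              # 70% code
NATIVE_CONTEXT = 256 * 1024    # 256K tokens (assumption: K = 1024)
TARGET_CONTEXT = 1024 * 1024   # 1M tokens (assumption: M = 1024 * 1024)


def code_tokens(total: float, ratio: float) -> float:
    """Number of pre-training tokens that are code."""
    return total * ratio


def extension_factor(native: int, target: int) -> float:
    """Ratio between the extended and the native context length."""
    return target / native


print(f"code tokens: {code_tokens(TOTAL_TOKENS, CODE_RATIO) / 1e12:.2f}T")
print(f"context extension factor: {extension_factor(NATIVE_CONTEXT, TARGET_CONTEXT):.1f}x")
```

Under these assumptions, roughly 5.25T of the 7.5T tokens are code, and reaching 1M tokens means stretching the native window by a factor of 4.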

Post-Training

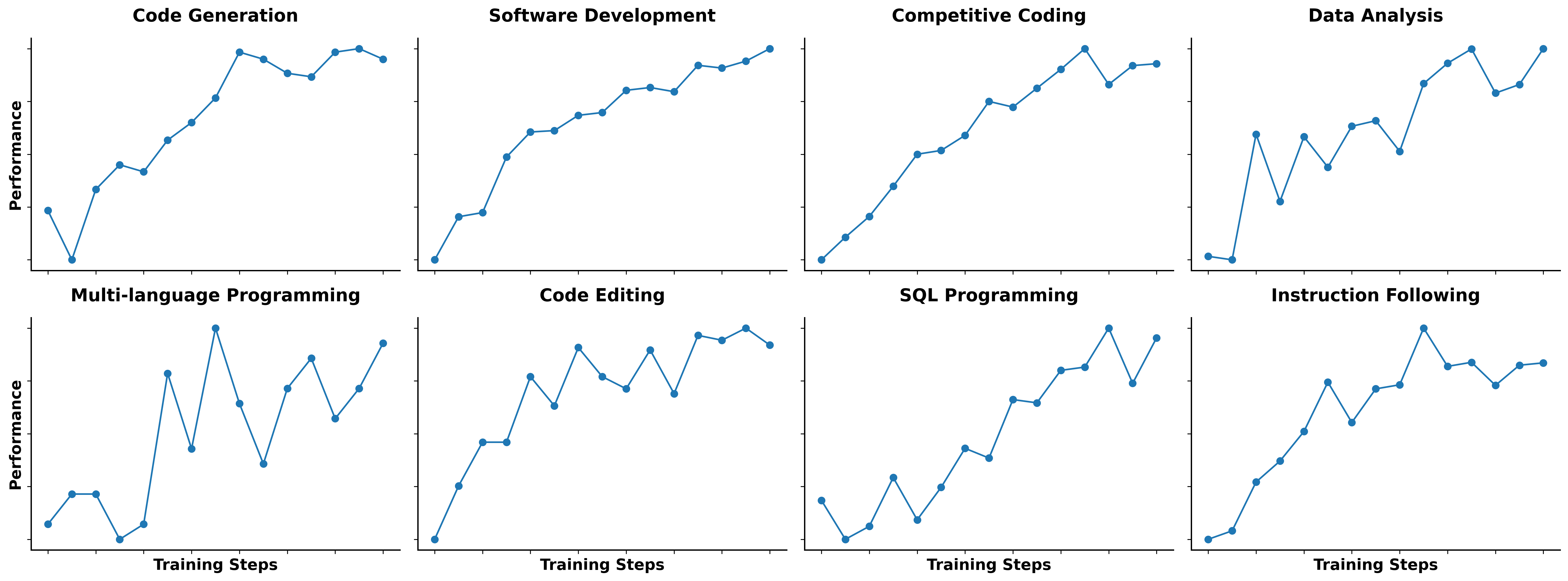

Scaling Code RL: Hard to Solve, Easy to Verify

Unlike the prevailing focus on competition-level code generation in the community, we believe all code tasks are naturally well-suited for execution-driven large-scale reinforcement learning. That’s why we scaled up Code RL training on a broader set of real-world coding tasks. By automatically scaling test cases across diverse coding tasks, we created high-quality training instances and unlocked the full potential of reinforcement learning. This not only significantly boosted code execution success rates but also brought gains on other tasks. It encourages us to keep exploring hard-to-solve, easy-to-verify tasks as fertile ground for large-scale reinforcement learning.
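To make "easy to verify" concrete, here is a minimal sketch of an execution-based reward: run a candidate solution against a set of test snippets and score it by the fraction that pass. The function name `execution_reward` and the pass-fraction scoring are assumptions for illustration, not the training setup described in the post, and a real system would run untrusted code inside a sandbox rather than a plain subprocess.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def execution_reward(solution: str, tests: list[str], timeout: float = 5.0) -> float:
    """Return the fraction of test snippets that run cleanly against `solution`."""
    if not tests:
        return 0.0
    passed = 0
    with tempfile.TemporaryDirectory() as tmp:
        for i, test in enumerate(tests):
            # Each test case is the solution followed by one assertion snippet.
            script = Path(tmp) / f"case_{i}.py"
            script.write_text(solution + "\n\n" + test + "\n")
            try:
                result = subprocess.run(
                    [sys.executable, str(script)],
                    capture_output=True,
                    timeout=timeout,
                )
            except subprocess.TimeoutExpired:
                continue  # a hang counts as a failure
            if result.returncode == 0:
                passed += 1
    return passed / len(tests)


candidate = "def add(a, b):\n    return a + b"
tests = [
    "assert add(1, 2) == 3",
    "assert add(-1, 1) == 0",
    "assert add(2, 2) == 5",  # deliberately wrong expectation
]
print(execution_reward(candidate, tests))
```

The verifier never needs to know how the solution was produced; it only needs tests it can execute, which is why generating more test cases scales the training signal.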

Scaling Long-Horizon RL

In real-world software engineering tasks like SWE-Bench, Qwen3-Coder must engage in multi-turn interaction with the environment, involving planning, using tools, receiving feedback, and making decisions. In the post-training phase of Qwen3-Coder, we introduced long-horizon RL (Agent RL) to encourage the model to solve real-world tasks through multi-turn interactions using tools. The key challenge of Agent RL lies in environment scaling. To address this, we built a scalable system capable of running 20,000 independent environments in parallel, leveraging Alibaba Cloud’s infrastructure. The infrastructure provides the necessary feedback for large-scale reinforcement learning and supports evaluation at scale. As a result, Qwen3-Coder achieves state-of-the-art performance among open-source models on SWE-Bench Verified without test-time scaling.
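The multi-turn loop described above can be illustrated with a toy rollout. Everything here is hypothetical: `ToyEnv`, `toy_policy`, and the counting task stand in for a real repository environment and the model, and a small thread pool stands in for the large-scale parallel infrastructure. The sketch only shows the shape of the interaction: observe, act, receive feedback, and get a reward at the end.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class ToyEnv:
    """Hypothetical environment: the agent must raise `value` to `target` via tool calls."""
    target: int
    value: int = 0
    history: list = field(default_factory=list)

    def step(self, action: str) -> tuple[str, bool]:
        """Apply one tool call and return (observation, done)."""
        if action == "increment":
            self.value += 1
        self.history.append(action)
        return f"value={self.value}", self.value >= self.target


def toy_policy(observation: str, target: int) -> str:
    """Stand-in for the model: choose the next tool call from the latest observation."""
    current = int(observation.split("=")[1])
    return "increment" if current < target else "stop"


def rollout(target: int, max_turns: int = 10) -> float:
    """Run one multi-turn episode and return 1.0 on success, 0.0 otherwise."""
    env = ToyEnv(target=target)
    observation, done = "value=0", False
    for _ in range(max_turns):
        action = toy_policy(observation, target)
        observation, done = env.step(action)
        if done:
            break
    return 1.0 if done else 0.0


# Independent environments can be rolled out in parallel.
with ThreadPoolExecutor(max_workers=4) as pool:
    rewards = list(pool.map(rollout, [1, 3, 5, 20]))
print(rewards)
```

The last task needs 20 steps but the episode is capped at 10 turns, so it earns no reward; long-horizon tasks are hard precisely because success depends on the whole trajectory.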

Code with Qwen3-Coder

Qwen Code

Qwen Code is a research-purpose CLI tool adapted from Gemini CLI, with enhanced parser and tool support for Qwen-Coder models.

Make sure you have Node.js 20 or later installed. You can install it with the following command:

curl -qL https://www.npmjs.com/install.sh | sh

Then install Qwen Code via npm:

npm i -g @qwen-code/qwen-code

The other way is to install from the source:

git clone https://github.com/QwenLM/qwen-code.git

cd qwen-code && npm install && npm install -g

Qwen Code supports the OpenAI SDK when calling LLMs. You can export the following environment variables or put them in a .env file.

export OPENAI_API_KEY="your_api_key_here"

export OPENAI_BASE_URL="https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

export OPENAI_MODEL="qwen3-coder-plus"
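If the tool fails to start, a missing variable is a likely cause. The following is a small pre-flight check, a sketch only: `missing_vars` is a hypothetical helper, not part of Qwen Code, and it assumes the three variable names shown above are the ones that matter.

```python
import os

# Variable names taken from the export lines above.
REQUIRED_VARS = ("OPENAI_API_KEY", "OPENAI_BASE_URL", "OPENAI_MODEL")


def missing_vars(environ=None):
    """Return the required variable names that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in REQUIRED_VARS if not environ.get(name)]


# Example with an explicit mapping; call missing_vars() to check the real environment.
example_env = {
    "OPENAI_API_KEY": "your_api_key_here",
    "OPENAI_BASE_URL": "https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
}
print(missing_vars(example_env))
```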

Now enjoy vibe coding with Qwen Code and Qwen by simply typing: qwen

Claude Code

In addition to Qwen Code, you can now use Qwen3‑Coder with Claude Code. Simply request an API key on the Alibaba Cloud Model Studio platform and install Claude Code to start coding.

npm install -g @anthropic-ai/claude-code

We provide two entry points for coding with Qwen3-Coder.

Option 1: Claude Code proxy API

export ANTHROPIC_BASE_URL=https://dashscope-intl.aliyuncs.com/api/v2/apps/claude-code-proxy

export ANTHROPIC_AUTH_TOKEN=your-dashscope-apikey

Then you should be able to use Claude Code with Qwen3-Coder!

Option 2: claude-code-config npm package for router customization

claude-code-router aims to customize the backend models used by Claude Code. The DashScope team also provides a convenient config npm extension, claude-code-config, which supplies a default configuration for claude-code-router with DashScope support.

Run installation:

npm install -g @musistudio/claude-code-router

npm install -g @dashscope-js/claude-code-config

and then run configuration:

The command will automatically generate the config json files and plugin directories for ccr. (You could also manually adjust these under ~/.claude-code-router/config.json and ~/.claude-code-router/plugins/ )

Start using claude code via ccr:

Cline

Configure Qwen3-Coder-480B-A35B-Instruct in Cline:

‒ Go to the Cline configuration settings

‒ For API Provider, select ‘OpenAI Compatible’

‒ For the OpenAI Compatible API Key, enter the key obtained from Dashscope

‒ Check ‘Use custom base URL’ and enter: https://dashscope-intl.aliyuncs.com/compatible-mode/v1

‒ Enter qwen3-coder-plus as the model

Use Cases

API

You can directly access the API of Qwen3-Coder through Alibaba Cloud Model Studio. Here is a demonstration of how to use this model with the Qwen API.

import os

from openai import OpenAI

# Create client - using intl URL for users outside of China
# If you are in mainland China, use the following URL:
# "https://dashscope.aliyuncs.com/compatible-mode/v1"
client = OpenAI(
    api_key=os.getenv("DASHSCOPE_API_KEY"),
    base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
)

prompt = "Help me create a web page for an online bookstore."

# Send request to qwen3-coder-plus model
completion = client.chat.completions.create(
    model="qwen3-coder-plus",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": prompt},
    ],
)

# Print the response
print(completion.choices[0].message.content.strip())
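Since the model is aimed at agentic tool use, a natural next step is to pass tool definitions with the request. Below is a minimal sketch that builds the request arguments in the OpenAI SDK's `tools` format. The `read_file` tool and the `build_tool_request` helper are hypothetical, and we assume here that the compatible-mode endpoint accepts the `tools` parameter for this model; check the Model Studio documentation before relying on it.

```python
import json


def build_tool_request(prompt: str, model: str = "qwen3-coder-plus") -> dict:
    """Build keyword arguments for client.chat.completions.create with one tool."""
    read_file_tool = {
        "type": "function",
        "function": {
            "name": "read_file",  # hypothetical tool name, for illustration only
            "description": "Return the contents of a text file in the workspace.",
            "parameters": {
                "type": "object",
                "properties": {
                    "path": {"type": "string", "description": "Relative file path."},
                },
                "required": ["path"],
            },
        },
    }
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "tools": [read_file_tool],
    }


request = build_tool_request("Summarize what main.py does.")
print(json.dumps(request, indent=2))

# With a configured client (network access and an API key required):
# completion = client.chat.completions.create(**request)
# tool_calls = completion.choices[0].message.tool_calls
```

Keeping the request as a plain dictionary makes it easy to inspect or log before sending, and the same dictionary can be reused across turns by appending tool results to `messages`.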

Further Work

We are still actively working to improve the performance of our Coding Agent, aiming for it to take on more complex and tedious tasks in software engineering, thereby freeing up human productivity. More model sizes of Qwen3-Coder are on the way, delivering strong performance while reducing deployment costs. Additionally, we are actively exploring whether the Coding Agent can achieve self-improvement—an exciting and inspiring direction.
