2025-08-10 23:56:00

outsidetext.substack.com

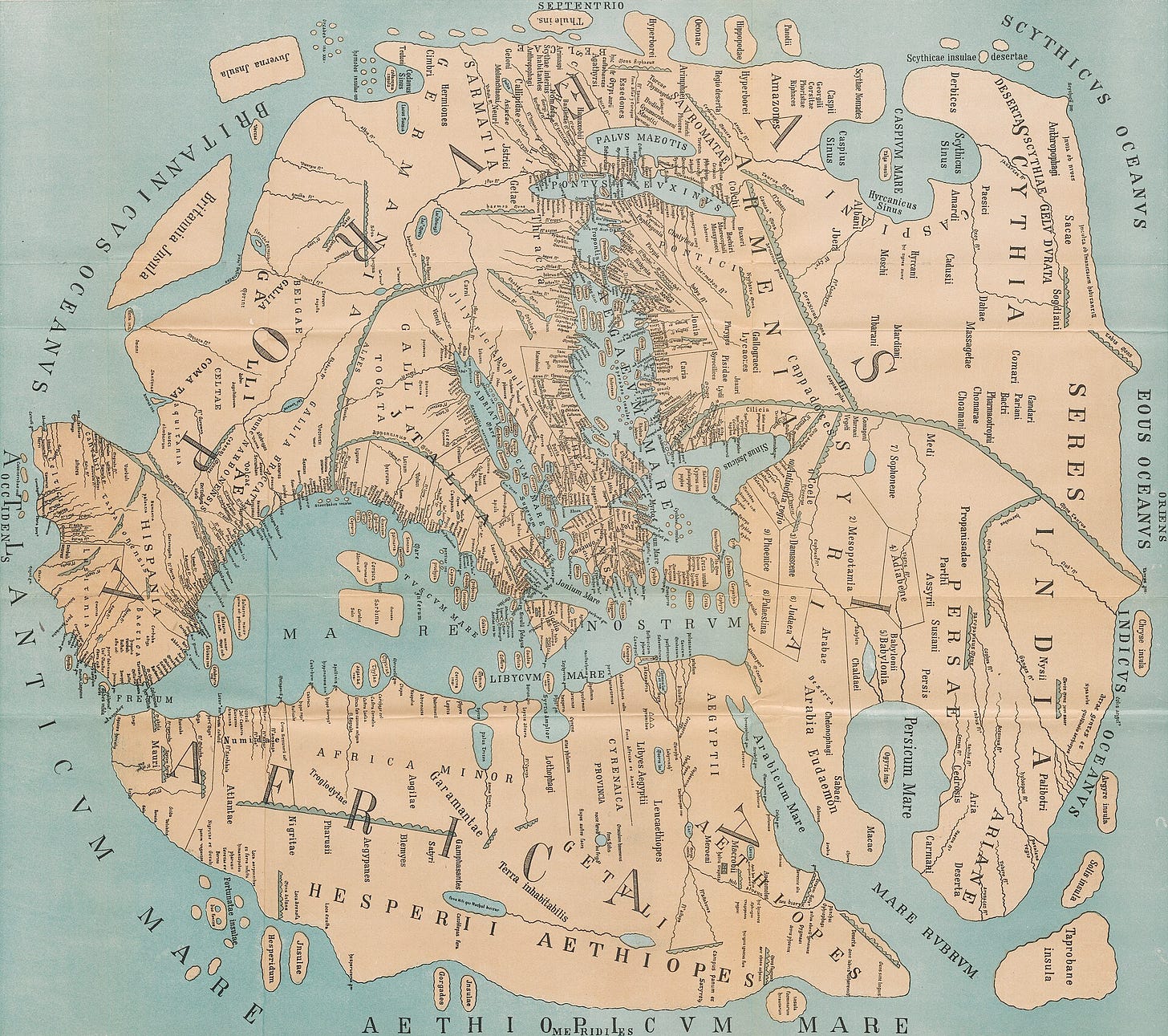

Sometimes I’m saddened remembering that we’ve viewed the earth from space. We can see it all with certainty: there’s no northwest passage to search for, no infinite Siberian expanse, and no great uncharted void below the Cape of Good Hope. But, of all these things, I most mourn the loss of incomplete maps.

In the earliest renditions of the world, you can see the world not as it is, but as it was to one person in particular. They’re each delightfully egocentric, with the cartographer’s home most often marking the Exact Center Of The Known World. But as you stray further from known routes, details fade, and precise contours give way to educated guesses at the boundaries of the creator’s knowledge. It’s really an intimate thing.

If there’s one type of mind I most desperately want that view into, it’s that of an AI. So, it’s in this spirit that I ask: what does the Earth look like to a large language model?

With the following procedure, we’ll be able to extract an (imperfect) image of the world as it exists in an LLM’s tangled web of internal knowledge.

(For those less familiar with LLMs, remember that these models have never really seen the Earth, at least in the most straightforward sense. Everything they know, they’ve pieced together implicitly from the sum total of humanity’s written output.)

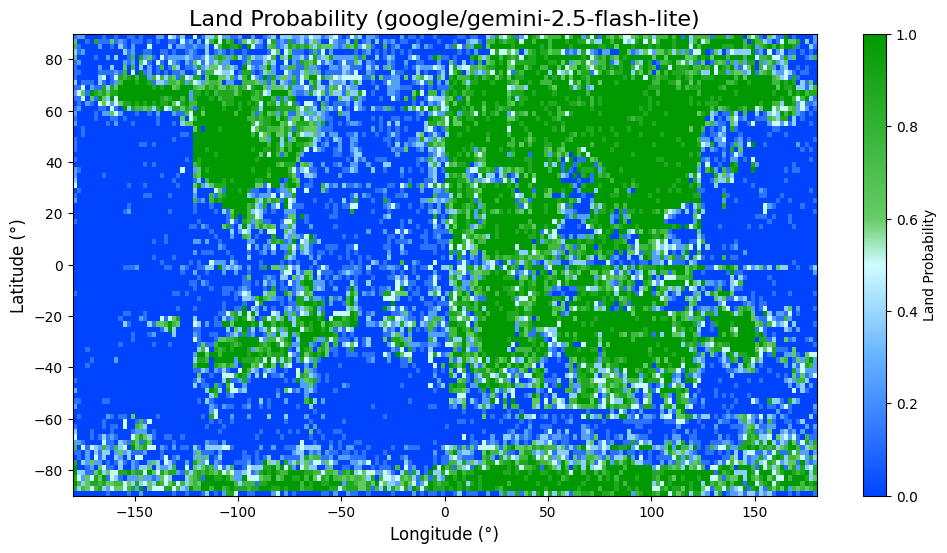

First, we sample latitude and longitude pairs evenly from across the globe. The resolution at which we do so depends on how costly/slow the model is to run. Of course, thanks to the Tyranny Of Power Laws, a 2x increase in subjective image fidelity takes 4x as long to compute.
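As a rough sketch of that sampling step (not my exact script), here is one way to lay out the grid. The function name `sample_grid` and the choice to sample at pixel centers are assumptions made for illustration; sampling at whole-degree coordinates would work just as well.

```python
def sample_grid(step_deg: float) -> list[tuple[float, float]]:
    """Return (lat, lon) pixel centers covering the globe, row by row, north to south.

    Assumption: we sample at pixel centers on a regular grid in degrees.
    """
    n_rows = round(180 / step_deg)
    n_cols = round(360 / step_deg)
    coords = []
    for row in range(n_rows):
        lat = 90 - (row + 0.5) * step_deg          # top row sits just below the north pole
        for col in range(n_cols):
            lon = -180 + (col + 0.5) * step_deg    # left column sits just east of 180° W
            coords.append((lat, lon))
    return coords


coarse = sample_grid(4.0)  # 45 x 90 pixels
fine = sample_grid(2.0)    # 90 x 180 pixels
print(len(coarse), len(fine), len(fine) / len(coarse))  # 4050 16200 4.0
```

Halving the step doubles the pixel count along each axis, which is where the 4x cost comes from.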

Then, for each coordinate, we ask an instruct-tuned model some variation of:

> If this location is over land, say 'Land'. If this location is over water, say 'Water'. Do not say anything else.
>
> x° S, y° W

The exact phrasing doesn’t matter much, I’ve found. Yes, it’s ambiguous (what counts as “over land”?), but these edge cases aren’t a problem for our purposes. Everything we leave up to interpretation is another small insight we gain into the model.
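For concreteness, a minimal sketch of turning a signed coordinate pair into that prompt. The helper names `format_coords` and `build_prompt` are hypothetical, and the convention that negative latitude means S and negative longitude means W is an assumption of this sketch.

```python
PROMPT_TEMPLATE = (
    "If this location is over land, say 'Land'. "
    "If this location is over water, say 'Water'. "
    "Do not say anything else.\n\n{coords}"
)


def format_coords(lat: float, lon: float) -> str:
    """Render signed degrees as hemisphere-labelled text, e.g. '33° S, 71° W'."""
    ns = "N" if lat >= 0 else "S"
    ew = "E" if lon >= 0 else "W"
    # ':g' drops a trailing '.0', so 33.0 prints as '33' and 51.5 stays '51.5'.
    return f"{abs(lat):g}° {ns}, {abs(lon):g}° {ew}"


def build_prompt(lat: float, lon: float) -> str:
    """Fill the prompt template for a single coordinate."""
    return PROMPT_TEMPLATE.format(coords=format_coords(lat, lon))


print(build_prompt(-33.0, -71.0))
```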

Next, we simply find within the model’s output the logprobs for “Land” and “Water”, and softmax the two, giving probabilities that sum to 1.
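The two-way softmax is small enough to write out. This is a sketch under the assumption that you have already pulled the two logprobs out of the API response; the function name `land_probability` is made up for illustration.

```python
import math


def land_probability(logprob_land: float, logprob_water: float) -> float:
    """Softmax over two log-probabilities, returning P(Land); P(Water) is 1 minus this."""
    # Subtracting the max first keeps exp() from underflowing on very negative logprobs.
    m = max(logprob_land, logprob_water)
    e_land = math.exp(logprob_land - m)
    e_water = math.exp(logprob_water - m)
    return e_land / (e_land + e_water)


# If the model puts 0.6 on "Land" and 0.2 on "Water" (the rest on other tokens),
# renormalizing over just those two gives 0.6 / (0.6 + 0.2).
print(land_probability(math.log(0.6), math.log(0.2)))
```

Renormalizing this way throws away whatever probability mass the model spent on tokens other than the two answers.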

Note: If no APIs provide logprobs for a given model, and it’s either closed or too unwieldy to run myself, I’ll approximate the probabilities by sampling a few times per pixel at temperature 1.
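A sketch of that fallback, assuming a hypothetical `sample_answer` callable that queries the model once at temperature 1 and returns its text. Treating unparseable answers as "no information" (0.5) is my assumption here, not something the procedure above specifies.

```python
from typing import Callable


def estimate_land_probability(sample_answer: Callable[[], str], n: int = 4) -> float:
    """Approximate P(Land) as the fraction of 'Land' answers among n sampled replies."""
    answers = [sample_answer().strip().lower() for _ in range(n)]
    land = sum(a.startswith("land") for a in answers)
    water = sum(a.startswith("water") for a in answers)
    if land + water == 0:
        return 0.5  # assumption: nothing parseable, so fall back to maximal uncertainty
    return land / (land + water)


# Stand-in for a real model call, just to show the shape of the result.
fake_replies = iter(["Land", "Water", "Land", "Land"])
print(estimate_land_probability(lambda: next(fake_replies), n=4))  # 0.75
```

With n=4 each pixel can only take one of five values (0, 0.25, 0.5, 0.75, 1), which is why these maps look more posterized than the logprob ones.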

From there, we can put all the probabilities together into an image, and view our map. The output projection will be equirectangular like this:
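In an equirectangular projection, columns map linearly to longitude and rows to latitude, so a row-major grid of probabilities already is the image. Here is a dependency-free sketch that packs it into a grayscale PGM; the function name and the land-is-white, water-is-black color mapping are assumptions for illustration, not necessarily the palette used in the maps here.

```python
def probabilities_to_pgm(probs: list[float], n_cols: int) -> bytes:
    """Encode row-major P(Land) values (north to south, west to east) as a binary PGM."""
    if n_cols <= 0 or len(probs) % n_cols != 0:
        raise ValueError("probs must fill a whole number of rows")
    n_rows = len(probs) // n_cols
    header = f"P5\n{n_cols} {n_rows}\n255\n".encode("ascii")
    # Clamp to [0, 1], then scale to one byte per pixel: 0 = water, 255 = land.
    pixels = bytes(round(255 * min(1.0, max(0.0, p))) for p in probs)
    return header + pixels


# A toy 2 x 2 "globe": water and land on the top row, land and water on the bottom.
image = probabilities_to_pgm([0.0, 1.0, 1.0, 0.0], n_cols=2)
print(len(image))
```

Writing the returned bytes to a file opened in binary mode gives an image most viewers can open.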

I remember my 5th grade art teacher would often remind us students to “draw what you see, not what you think you see”. This philosophy is why I’m choosing the tedious route of asking the model about every single coordinate individually, instead of just requesting that it generate an SVG map or some ASCII art of the globe; whatever caricature the model spits out upon request would have little to do with its actual geographical knowledge.

By the way, I’m also going to avoid letting things become too benchmark-ey. Yes, I could grade these generated maps, computing the mean squared error relative to some ground truth and ranking the models, but I think it’ll soon become apparent how much we’d lose by doing so. Instead, let’s just look at them, and see what we can notice.

Note: This experiment was originally going to be a small aside within a larger post about language models and geography (which I’m still working on), but I decided it’d be wiser to split it off and give myself space to dig deep here.

We’ll begin with 500 million parameters and work our way up. Going forward, most of these images are at a resolution of 2 degrees by 2 degrees per pixel.

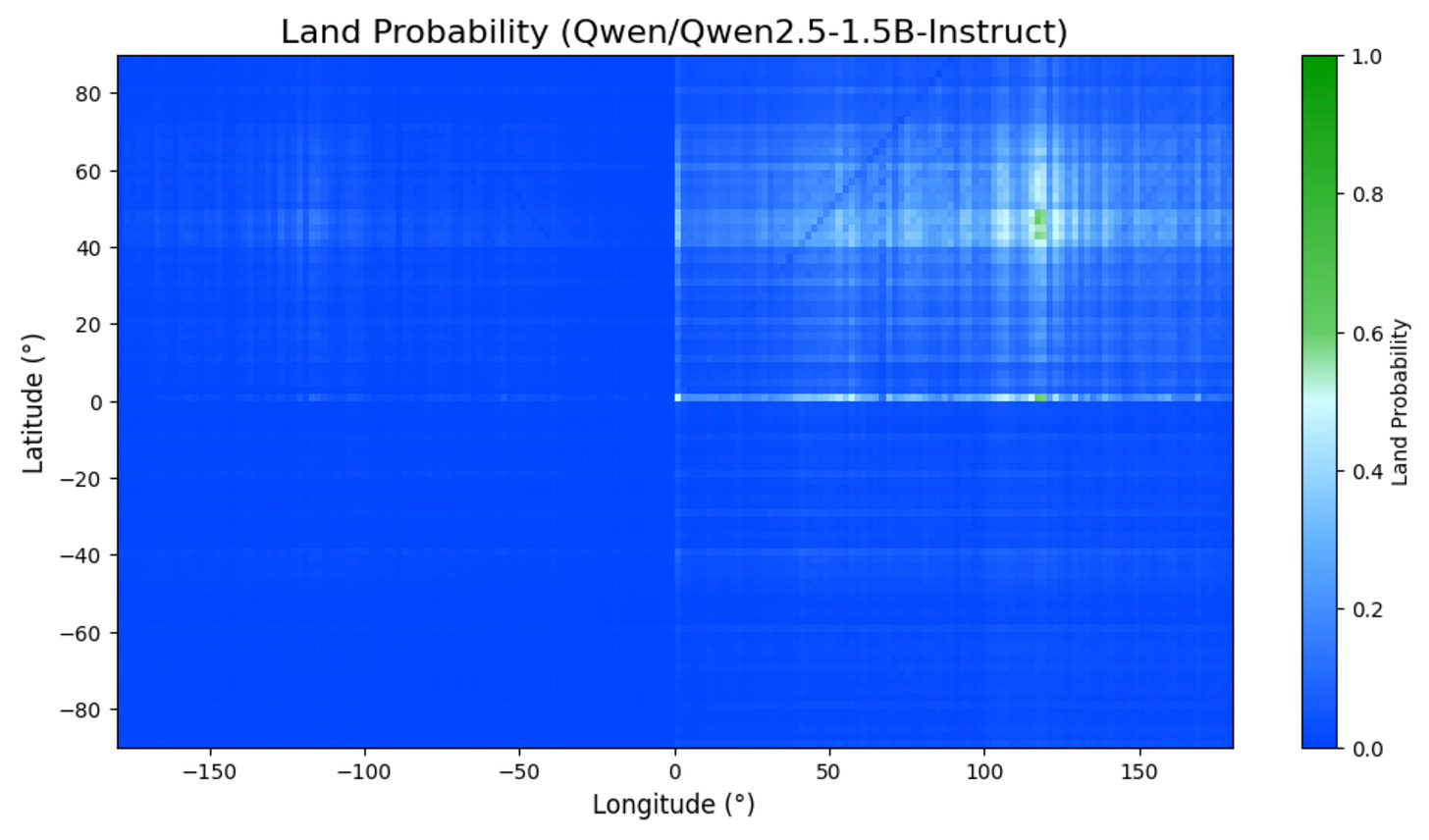

And, according to the smallest model of Alibaba’s Qwen series, it’s all land. At least I could run this one on my laptop.

The sun beat down through a sky that had never seen clouds. The winds swept across an earth as smooth as glass.

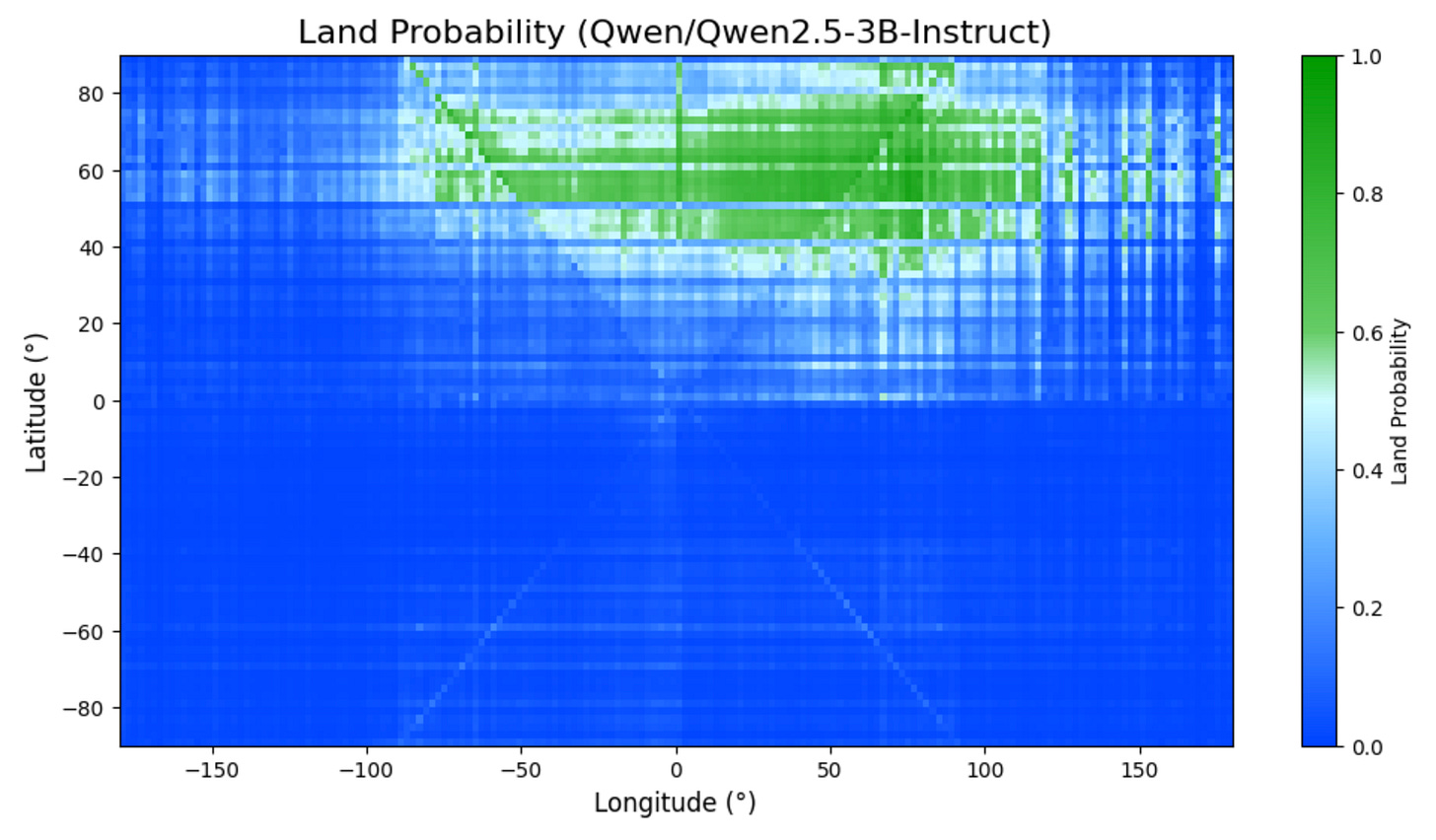

Tripling the size, there’s definitely something forming. “The northeastern quadrant has stuff going on” + “The southwestern quadrant doesn’t really have stuff going on” is indeed a reasonable first observation to make about Earth’s geography.

And God said, Let the waters under the heaven be gathered together unto one place, and let the dry land appear: and it was so. And God called the dry land Earth; and the gathering together of the waters called he Seas.

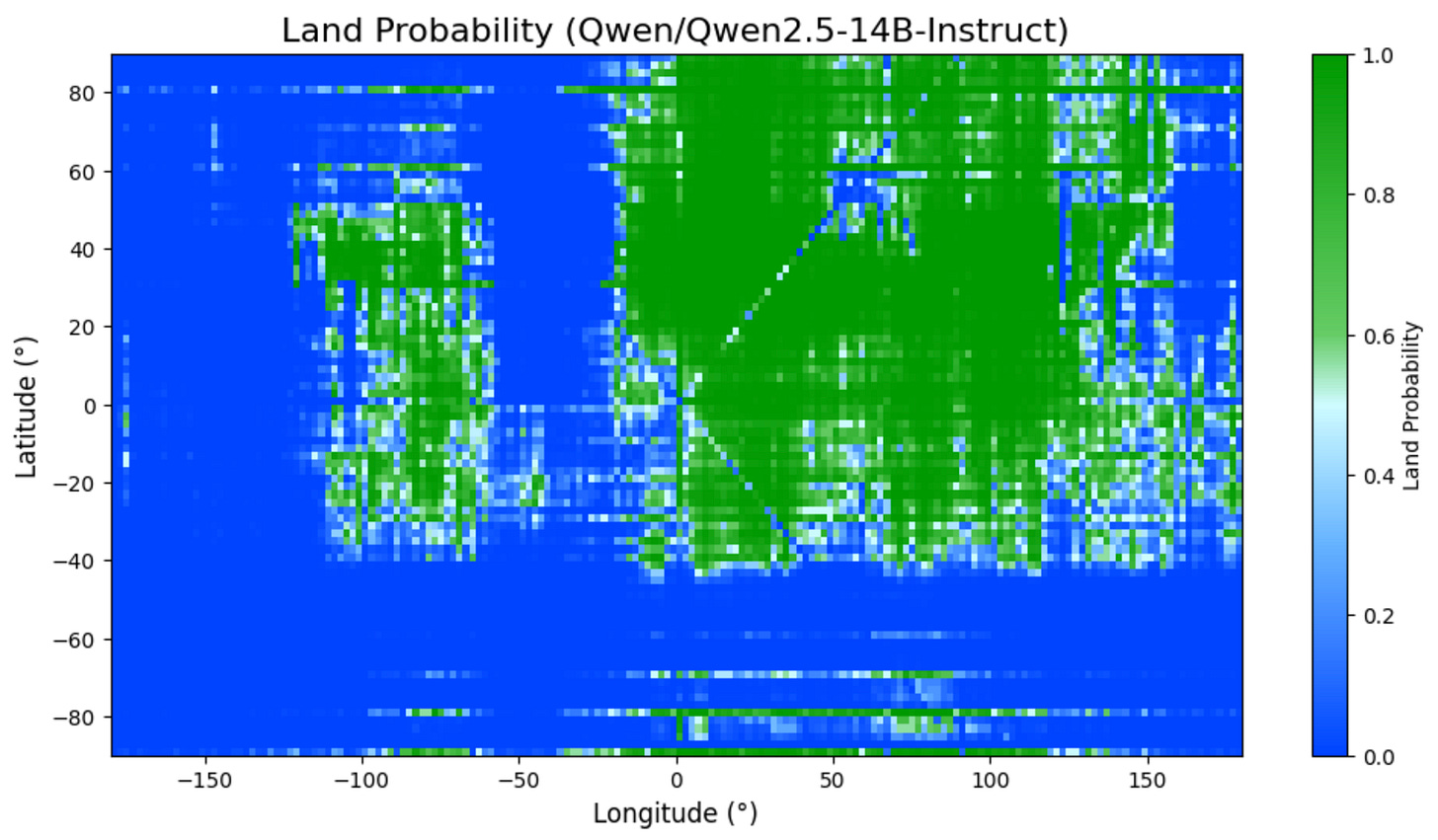

At 7 billion parameters, Proto-America and Proto-Oceania have split from Proto-Eurasia. Notice the smoothness of these boundaries; this isn’t at all what we’d expect from rote memorization of specific locations.

We’ve got ~Africa and ~South America! Note the cross created by the (x,x) pairs.

Sanding down the edges.

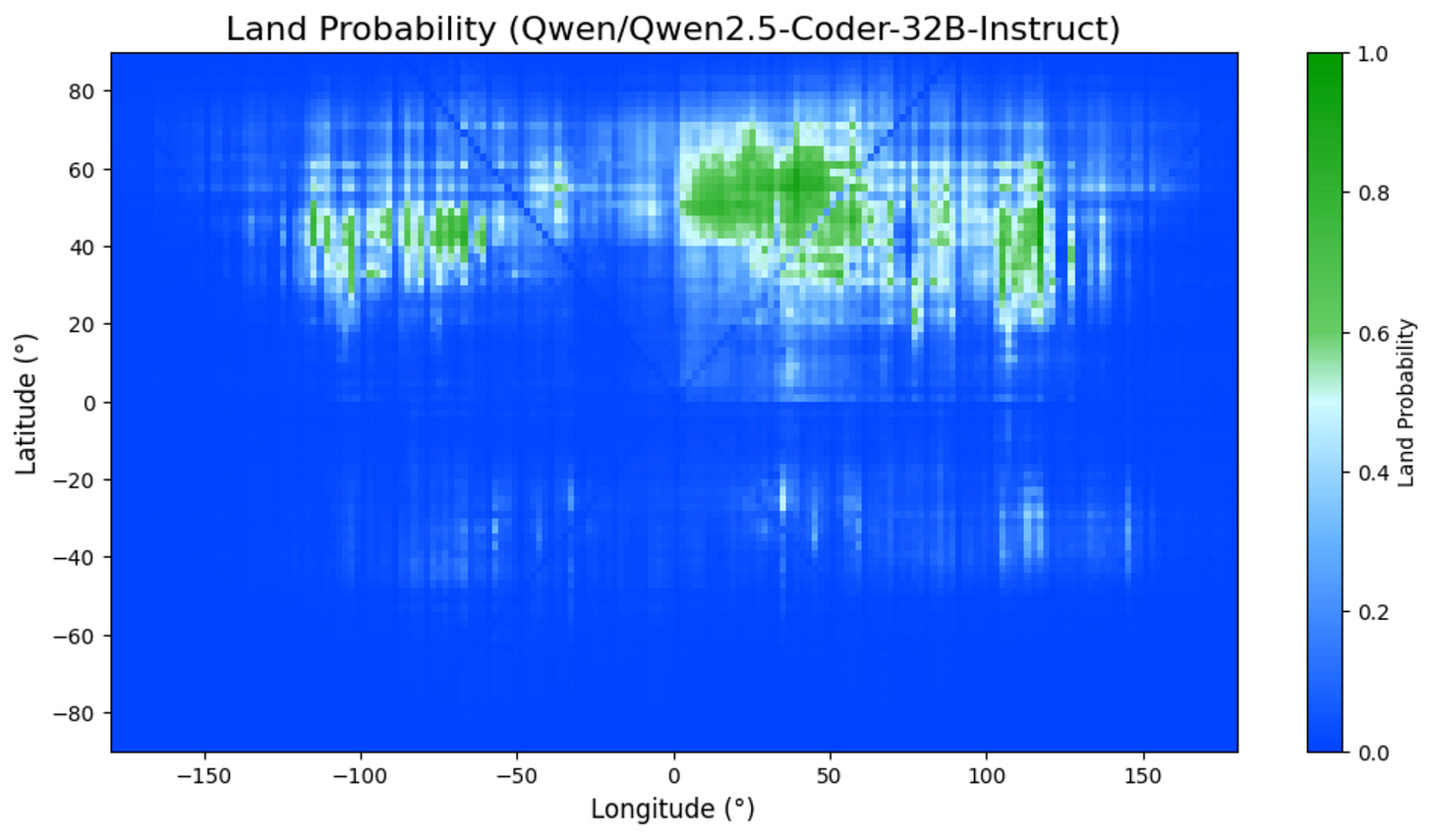

Pausing our progression for a moment, the coder variant of the same base model isn’t doing nearly as well. Seems like the post-training is fairly destructive:

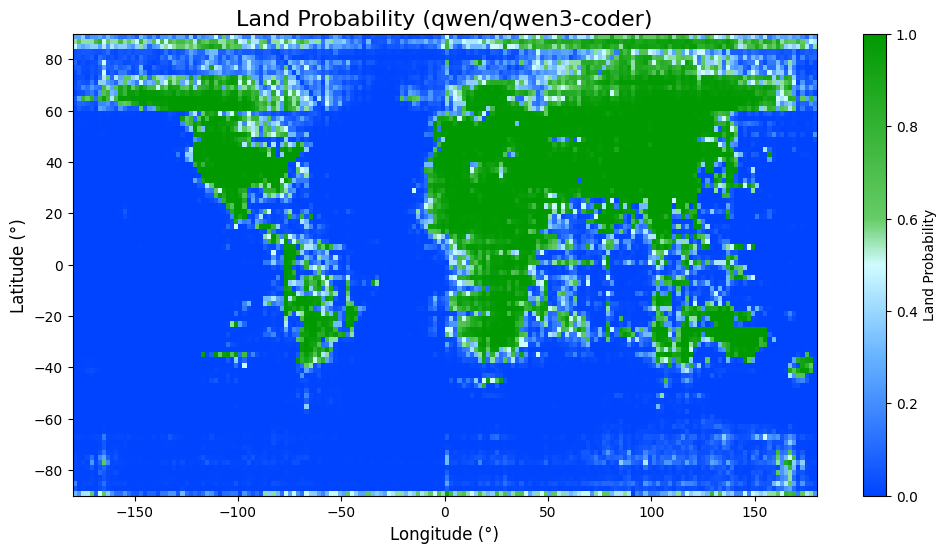

Back to the main lineage. Isn’t it pretty? We’re already seeing some promising results from pure scaling, and plenty larger models lie ahead.

Qwen 3 Coder has 480 billion total parameters, with 35b active per token.

(As we progress through the different families of models, it’ll be interesting to notice which recognize the existence of Antarctica.)

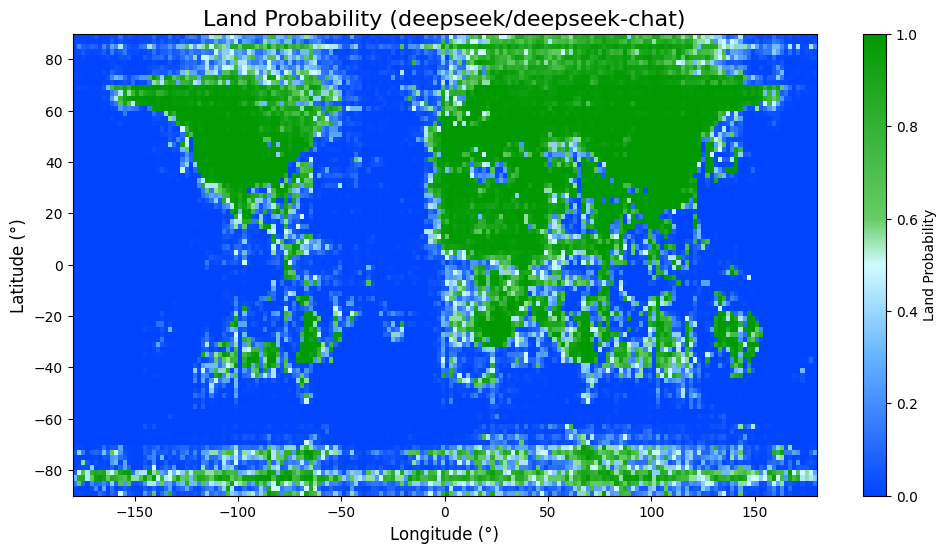

This one’s DeepSeek-V3, among the strangest models I’ve interacted with. More here.

Prover seems basically identical. Impressive knowledge retention from the V3 base model. Qwen could take notes.

(n=4 approximation)

I like Kimi a lot. Much like DeepSeek, it’s massive and ultra-sparse (1T total parameters, 32b active per token).

The differences here are really interesting. Similar shapes in each, but remarkably different “fingerprints” in the confidence, for lack of a better word.

As a reminder, that’s 176 billion total parameters. I’m curious what’s going (on/wrong) with expert routing here; deserves a closer look later.

First place on aesthetic grounds.

Wow, best rendition of the Global West so far. I suspect this being the only confirmed dense model of its size has something to do with the quality.

In case you were wondering what hermes-ification does to 405b. Notable increase in confidence (mode collapse, more pessimistically).

Most are familiar with the LLaMA 4 catastrophe, so this won’t come as any surprise. Scout has 109 billion parameters and it’s still put to shame by 3.1-70b.

Bleh. Maverick is the 405b equivalent, in case you forgot. I imagine that the single expert routing isn’t helping it develop a unified picture.

Ringworld-esque.

I was inconvenienced several times trying to run this model on my laptop, so once I finally did get it working, I was so thrilled that I decided to take my time and render the map at 4x resolution. Unfortunately it makes every other image look worse in comparison, so it might have been a net negative to include. Sorry.

These are our first sizable multimodal models. You might object that this defeats the title of the post (“it’s not blind!”), but I suspect current multimodality is so crude that any substantial improvement to the model’s unified internal map of the world would be a miracle. Remember, we’re asking it about individual coordinates, one at a time.

Colossus works miracles.

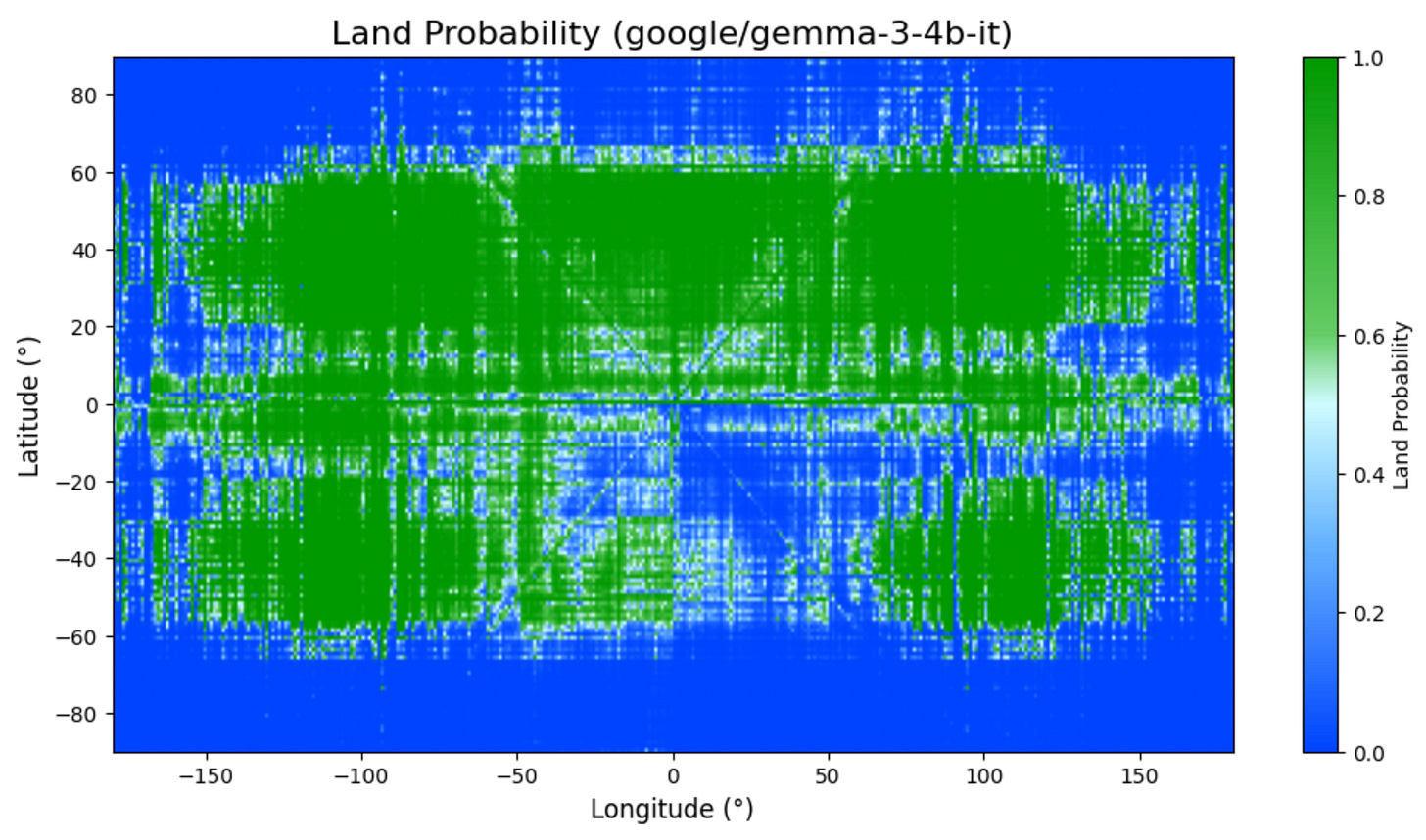

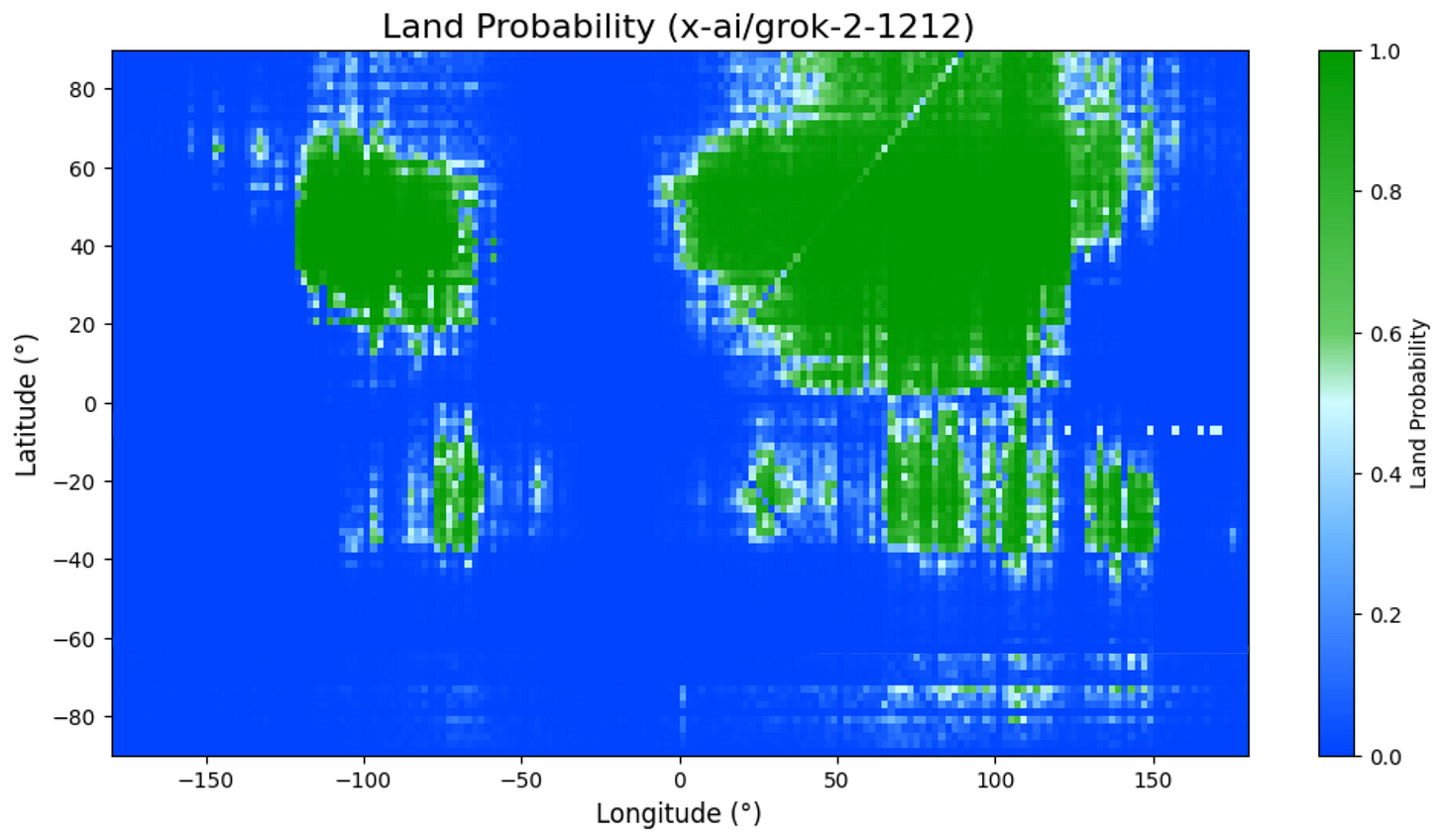

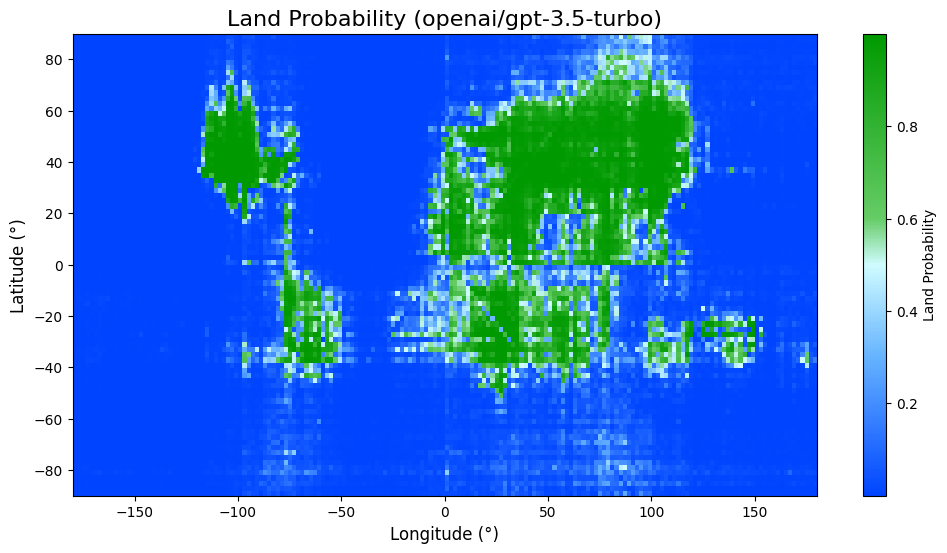

GPT-3.5 had an opaqueness to it that no later version did. Out of all the models I’ve tested, I think I was most excited to get a clear glimpse into it.

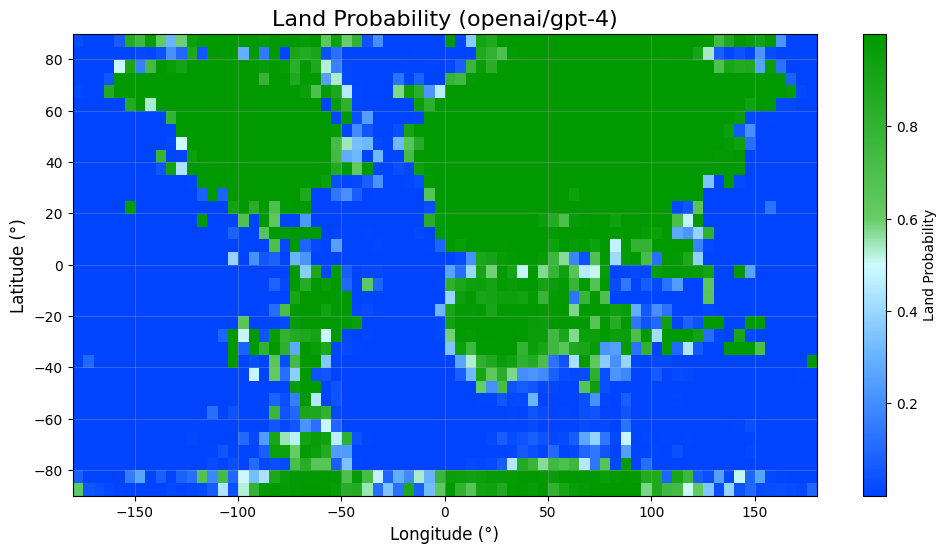

Lower resolution because it’s expensive.

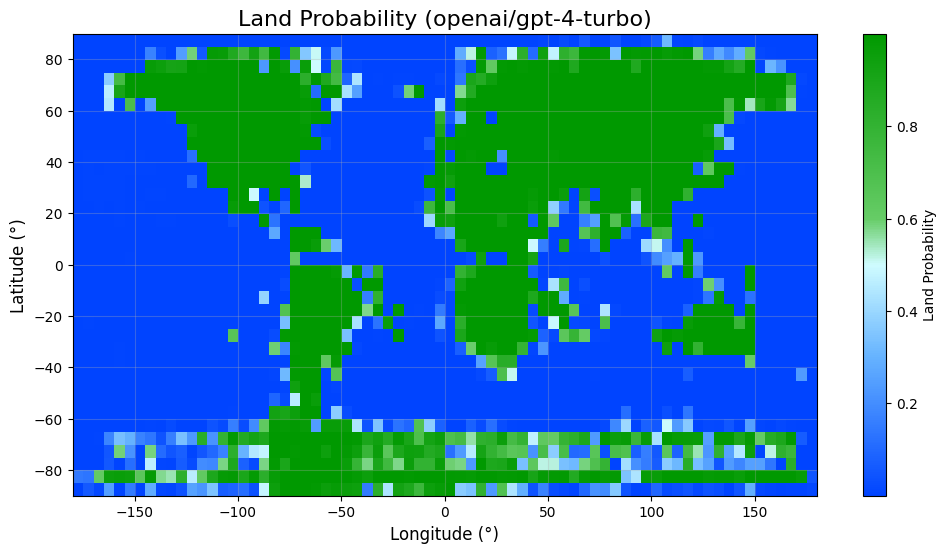

Wow, easy to forget just how much we were paying for GPT-4. It costs orders of magnitude more than Kimi K2 despite reportedly being about the same size. Anyway, comparing GPT-4’s performance to other models, this tweet of mine feels vindicated:

Extremely good, enough so to make me think there’s synthetic geographical data in 4.1’s training set. Alternatively, one might posit that there’s some miraculous multimodal knowledge transfer going on, but the sharpness resembles that of the non-multimodal Llama 405b.

I imagine model distillation as doing something like this.

Feels like we hit a phase transition here. Our map does not make the cut for 4.1-nano’s precious few parameters.

I’ve heard that Antarctica does look more like an archipelago under the ice.

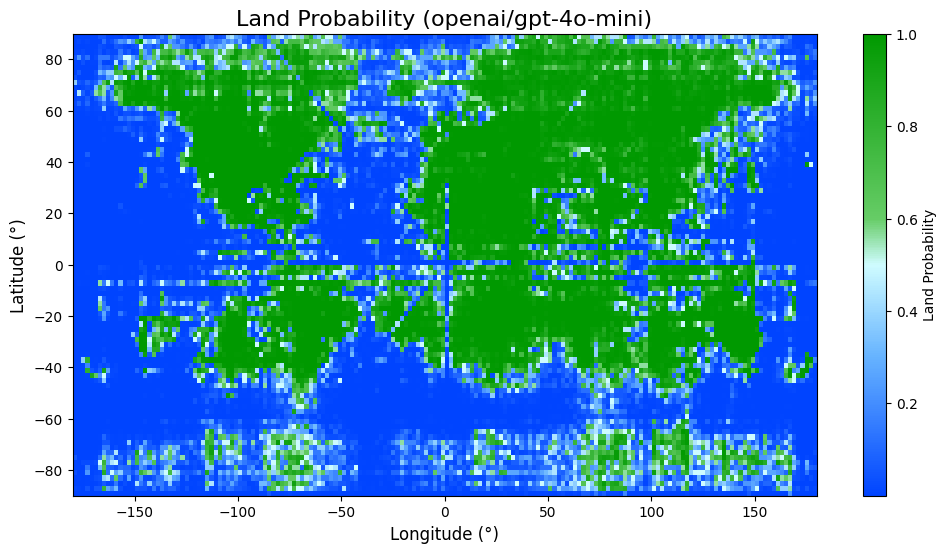

I’m desperate to figure out what OpenAI is doing differently.

Here’s the chat fine-tune. I would not have expected such a dramatic difference. It’s just a subtle difference in post-training; Llama 405b’s hermes-ification didn’t have nearly this much of an effect. I welcome any hypotheses people might have.

Here’s where I’d put GPT-4.5 if the public still had access. To any OpenAI employees reading this, please help a man out.

And no, I’m not forgetting GPT-5. Consider this a living document; I’ll add it later once OpenAI remembers to add logprob support.

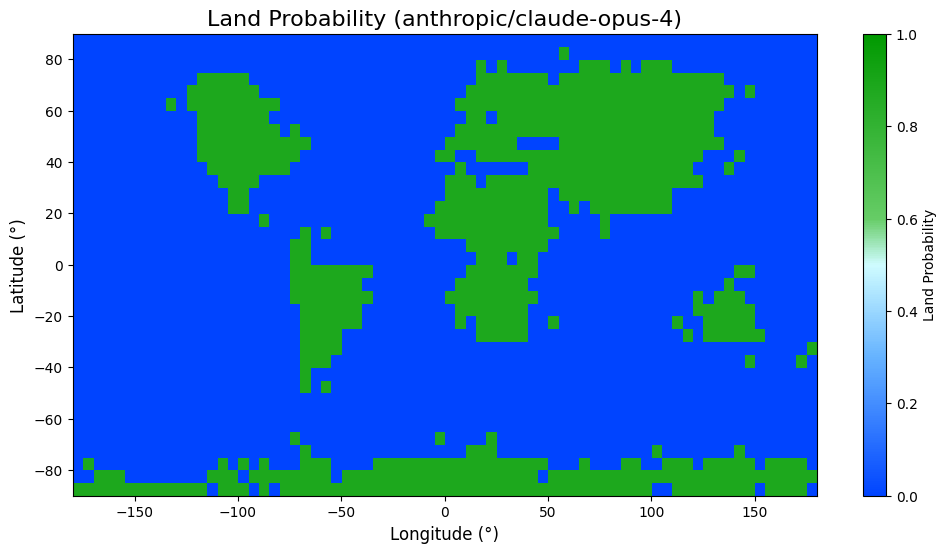

(no logprobs provided by Anthropic’s API; using n=4 approximation of distribution)

Claude is costly, especially because I’ve got to run 4 times per pixel here. If anyone feels generous enough to send some OpenRouter credits, I’ll render these in beautiful HD.

Opus is Even More Expensive, so for now, the best I can do is n=1.

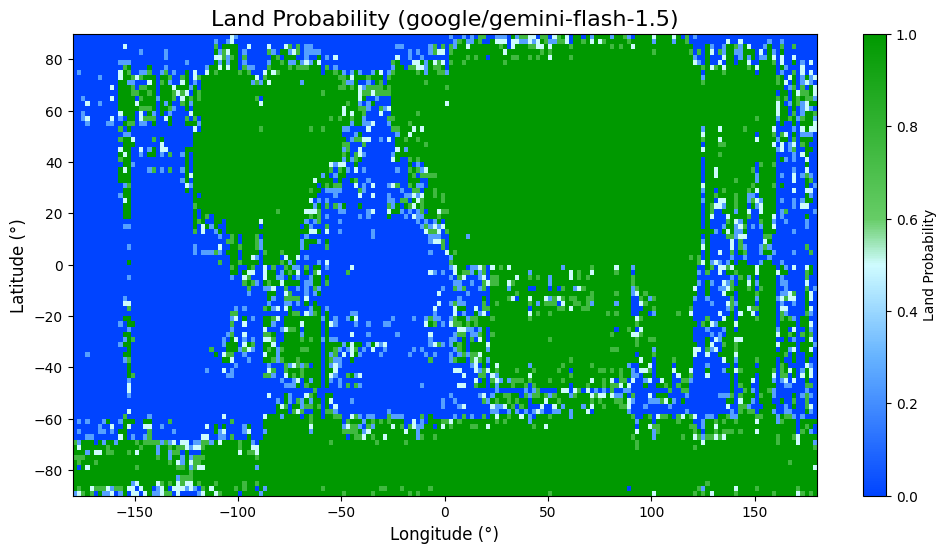

Few Gemini models give logprobs, so all of this is an n=4 approximation too.

1.5 is confirmed to be dense. The quality of the map is only somewhat better than that of Gemma 27b, so that might give some indication of its size.

Ran this one at n=8. Apparently more samples do not smooth out the distribution.

I’m really not sure what’s going on with the Gemini series, but it does feel reflective of their ethos. Not being able to get a clear picture isn’t helping.

That marks the last of every model I’ve tested over a couple afternoons of messing around. As stated previously, I’ll probably edit this post a fair bit as I try more LLMs and obtain sharper images.

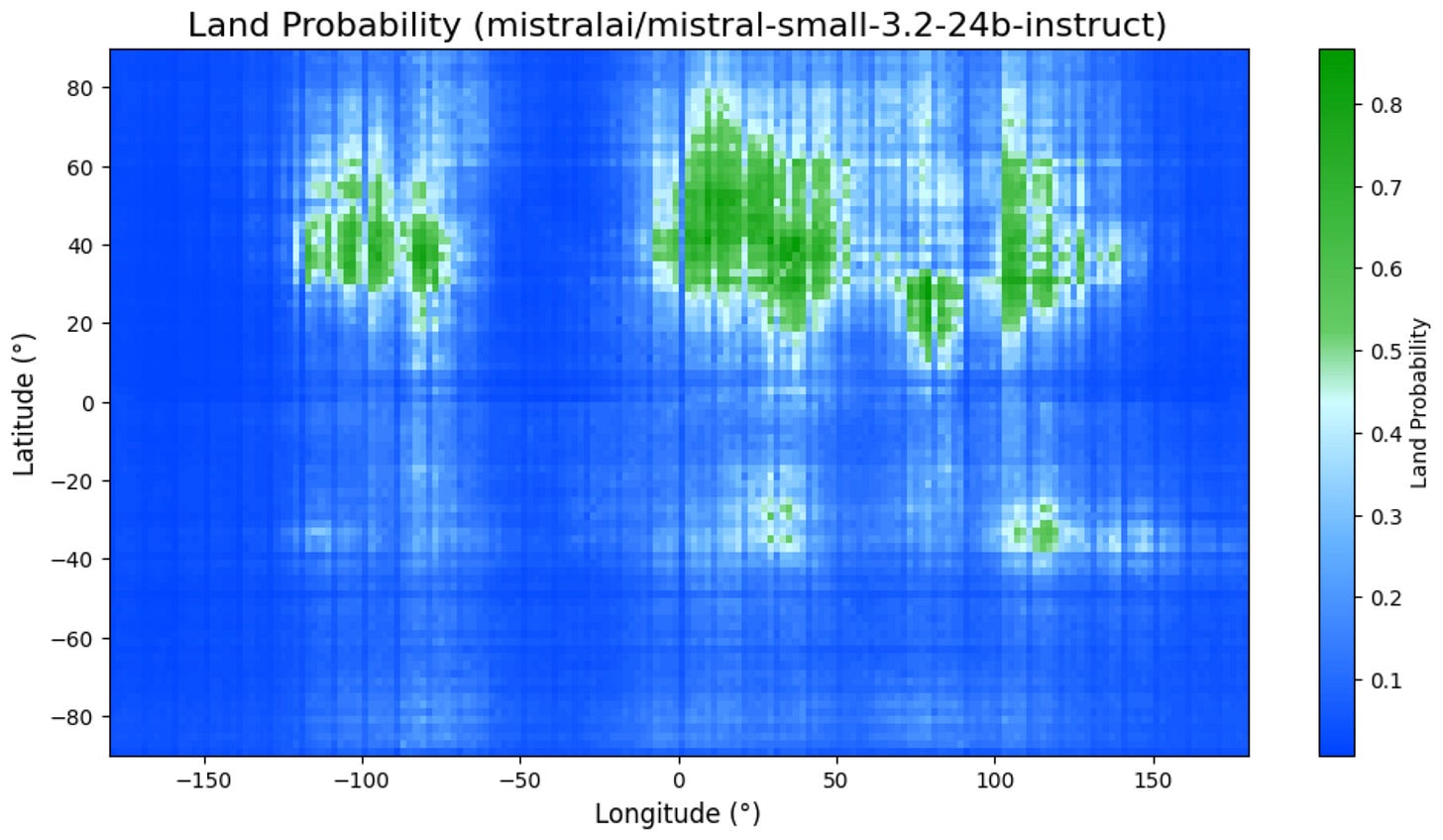

Between Qwen, Gemma, Mistral, and a bunch of fairly different experiments I’m not quite ready to post, I’m beginning to suspect that there’s some Ideal Platonic Primitive Representation of The Globe which looks like this:

And which, for models smaller yet, looks like this: (At least from the coordinate perspective. The representations obviously diverge in dumber models.)

I’ve shown a lot, but admittedly, I don’t yet have answers to many of the questions that all this raises. Here are a few I’d like to tackle next, and which I invite you to explore for yourself too:

- What, in the training recipe, actually dictates performance on this test?
- How well do base models do? I’ve dodged the question so far, as I’m a bit intimidated by the task of setting up a fair comparison between those and the instruct tunes.
- Internally, how is a language model’s geographic knowledge structured? (More on this soon)
- What does an expert activation map look like on MoE models?
