2025-09-11 14:55:00

www.shloked.com

Earlier this week, I dissected ChatGPT’s memory system. Since then, I’ve been doing the same for Claude and realized something remarkable: these two leading AI assistants have built completely opposite memory systems.

In this post, I’ll start by breaking down exactly how Claude’s memory works: what it stores and how it retrieves information. Then we’ll get to the interesting stuff: why these architectures diverge so dramatically, what that tells us about who uses each assistant and the philosophies driving each product’s development, and just how vast the AI memory design space really is.

How it works

Claude’s memory system has two fundamental characteristics. First, it starts every conversation with a blank slate, without any preloaded user profiles or conversation history. Memory only activates when you explicitly invoke it. Second, Claude recalls only by referring to your raw conversation history. There are no AI-generated summaries or compressed profiles, just real-time searches through your actual past chats.

When Claude detects memory invocation through phrases like “what did we discuss about,” “continue where we left off,” or “remember when we talked about,” it deploys two retrieval tools that work like web search or code execution: you see them activate in real time and wait while Claude searches through your history. Once the search completes, Claude synthesizes the retrieved conversations to answer your question or continue the discussion.

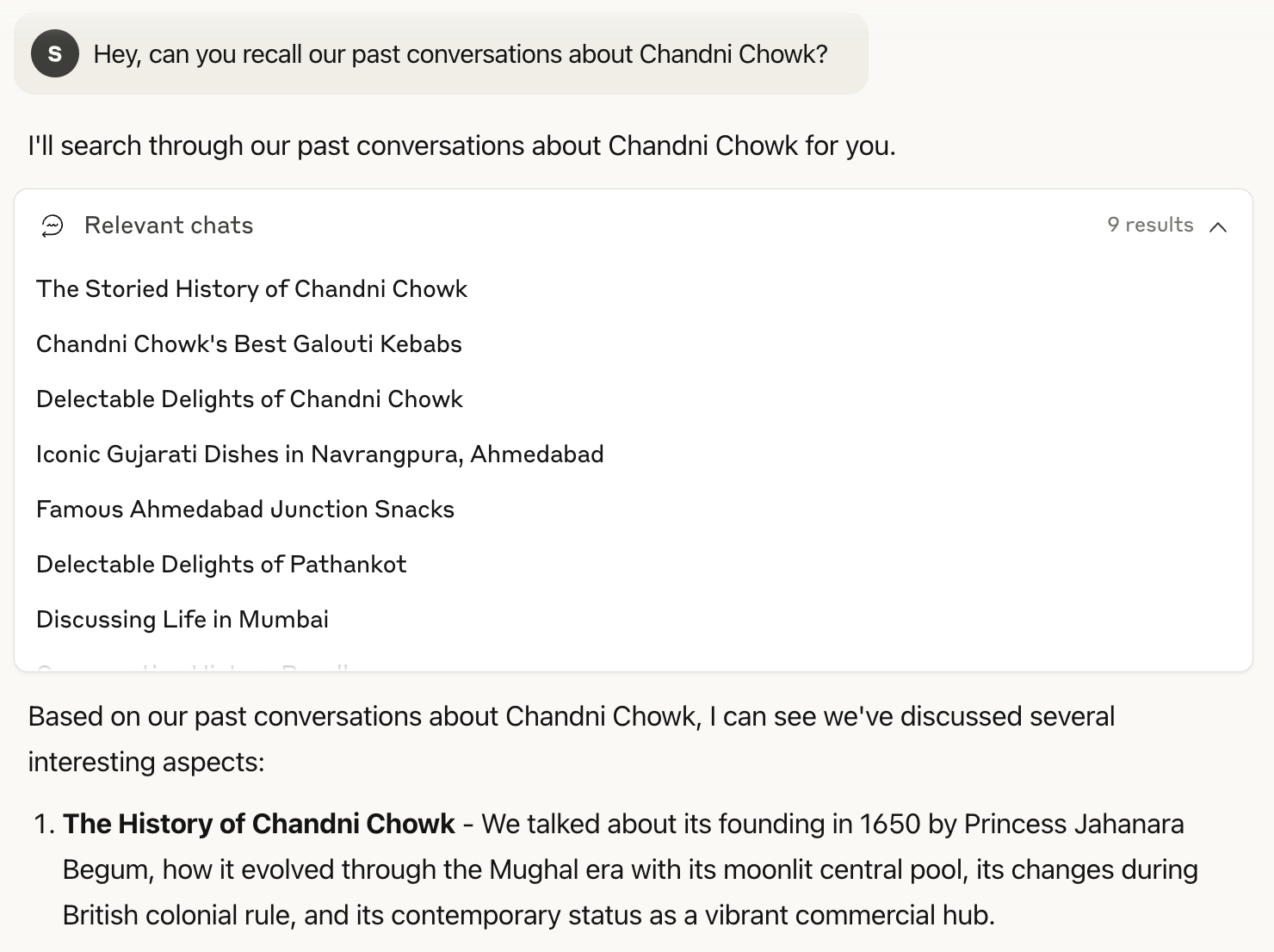

Conversation Search

The conversation_search tool helps with keyword and topic-based searches across your entire conversation history. When I asked “Hey, can you recall our past conversations about Chandni Chowk?” (a historic neighborhood in Delhi), Claude found 9 relevant conversations—from when I explored its founding by Princess Jahanara Begum in 1650 to my queries about the best galouti kebabs at Karim’s and stuffed parathas at Paranthe Wali Gali. Claude synthesized these scattered discussions into a coherent summary of my Chandni Chowk explorations.

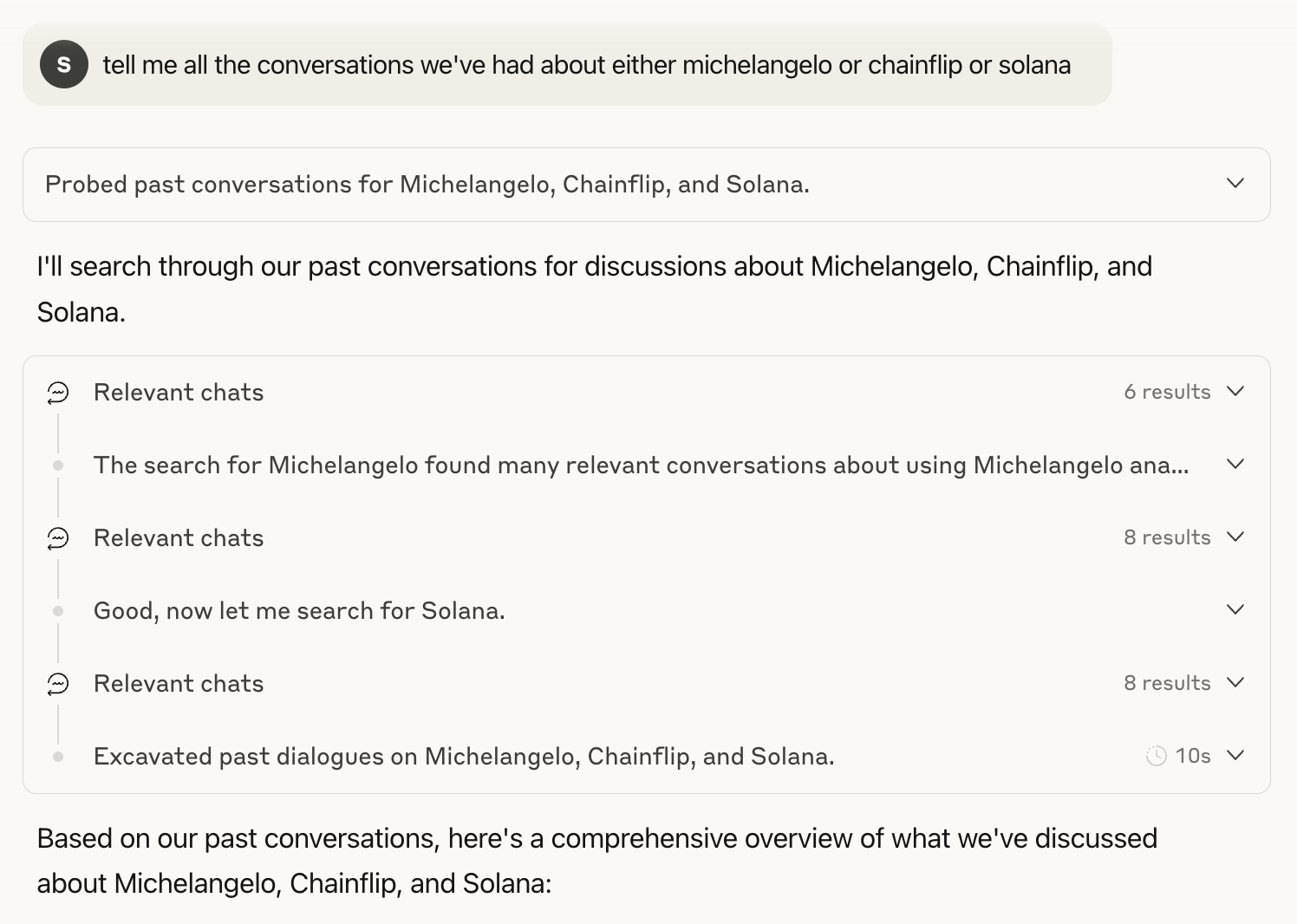

When you ask about multiple topics, Claude runs separate searches sequentially. In my past job as a crypto researcher, I used Claude extensively as an editor. When I asked “Tell me all the conversations we’ve had about either Michelangelo or Chainflip or Solana,” Claude ran three separate searches—one for my Michelangelo analogies for neural networks, another for Chainflip’s cross-chain protocol work, and a third for Solana’s technical architecture. It found 22 conversations across these searches and delivered a unified response with direct links to each chat.
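
To make the multi-topic behavior concrete, here is a minimal sketch of running one search per topic and merging the hits. Everything in it is an assumption for illustration: `HISTORY`, `fake_conversation_search`, `search_topics`, and `unique_conversations` are hypothetical names, and the substring match is only a stand-in, since how the real tool ranks conversations is not public.

```python
from typing import Callable

# Hypothetical in-memory stand-in for a user's chat history: id -> text.
HISTORY = {
    "c1": "Michelangelo analogies for neural networks",
    "c2": "Chainflip cross-chain protocol notes",
    "c3": "Solana technical architecture and Chainflip comparison",
    "c4": "Weekend recipes",
}


def fake_conversation_search(query: str, max_results: int = 5) -> list[str]:
    """Toy substring match; the real tool's retrieval logic is unknown."""
    hits = [cid for cid, text in HISTORY.items() if query.lower() in text.lower()]
    return hits[:max_results]


def search_topics(
    topics: list[str], search: Callable[..., list[str]]
) -> dict[str, list[str]]:
    """Run one search per topic, in order, and record the hits per topic."""
    return {topic: search(topic, max_results=10) for topic in topics}


def unique_conversations(results: dict[str, list[str]]) -> list[str]:
    """Merge per-topic hits, keeping first-seen order and dropping repeats."""
    seen: dict[str, None] = {}
    for hits in results.values():
        for cid in hits:
            seen.setdefault(cid, None)
    return list(seen)


results = search_topics(
    ["Michelangelo", "Chainflip", "Solana"], fake_conversation_search
)
merged = unique_conversations(results)
```

The deduplication step matters because one chat can match several topics (here the hypothetical `c3` mentions both Chainflip and Solana), and a unified answer should link to it only once.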

    {
      "description": "Search through past user conversations to find relevant context and information",
      "name": "conversation_search",
      "parameters": {
        "properties": {
          "max_results": {
            "default": 5,
            "description": "The number of results to return, between 1-10",
            "exclusiveMinimum": 0,
            "maximum": 10,
            "title": "Max Results",
            "type": "integer"
          },
          "query": {
            "description": "The keywords to search with",
            "title": "Query",
            "type": "string"
          }
        },
        "required": ["query"],
        "title": "ConversationSearchInput",
        "type": "object"
      }
    }

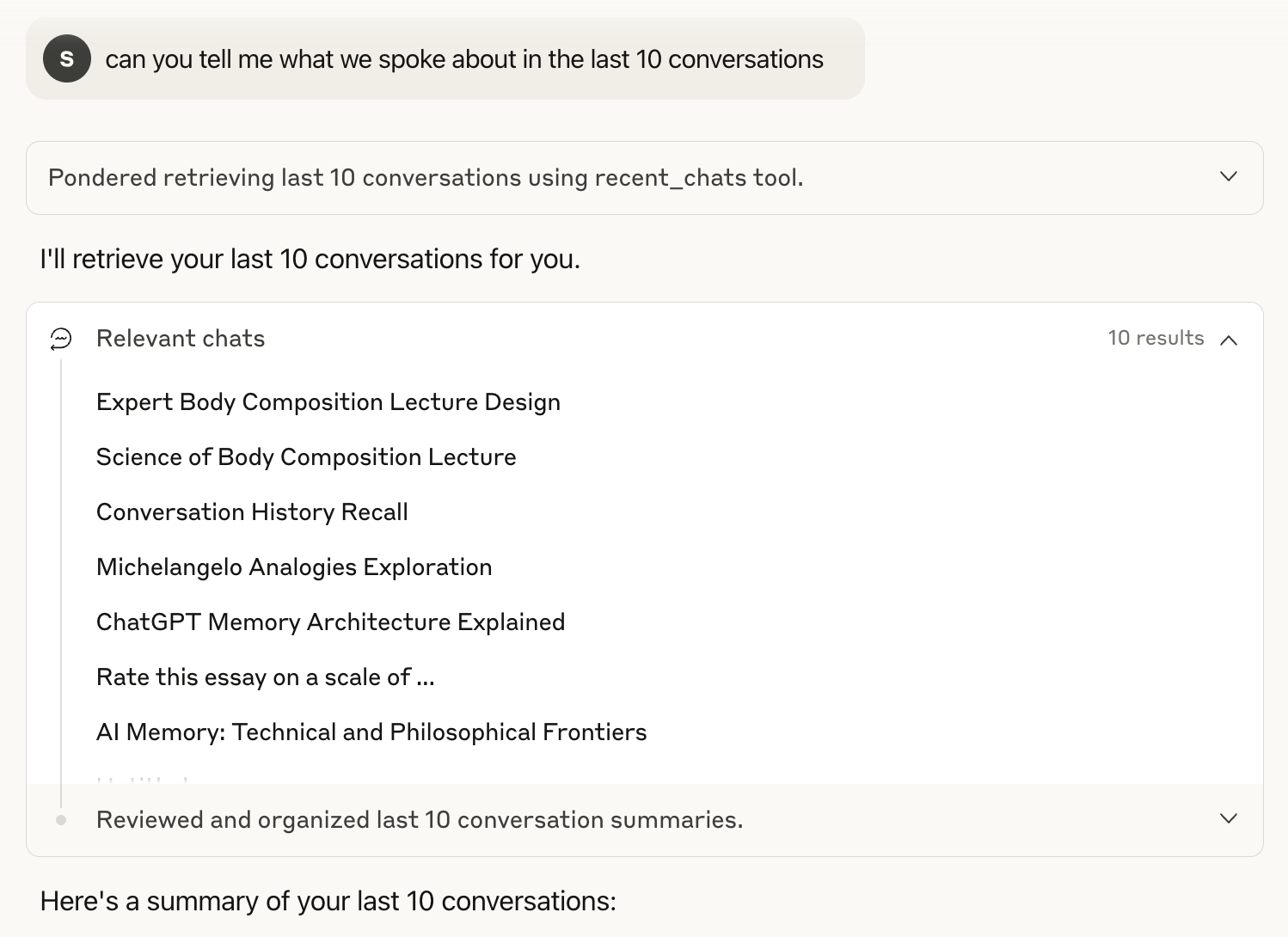

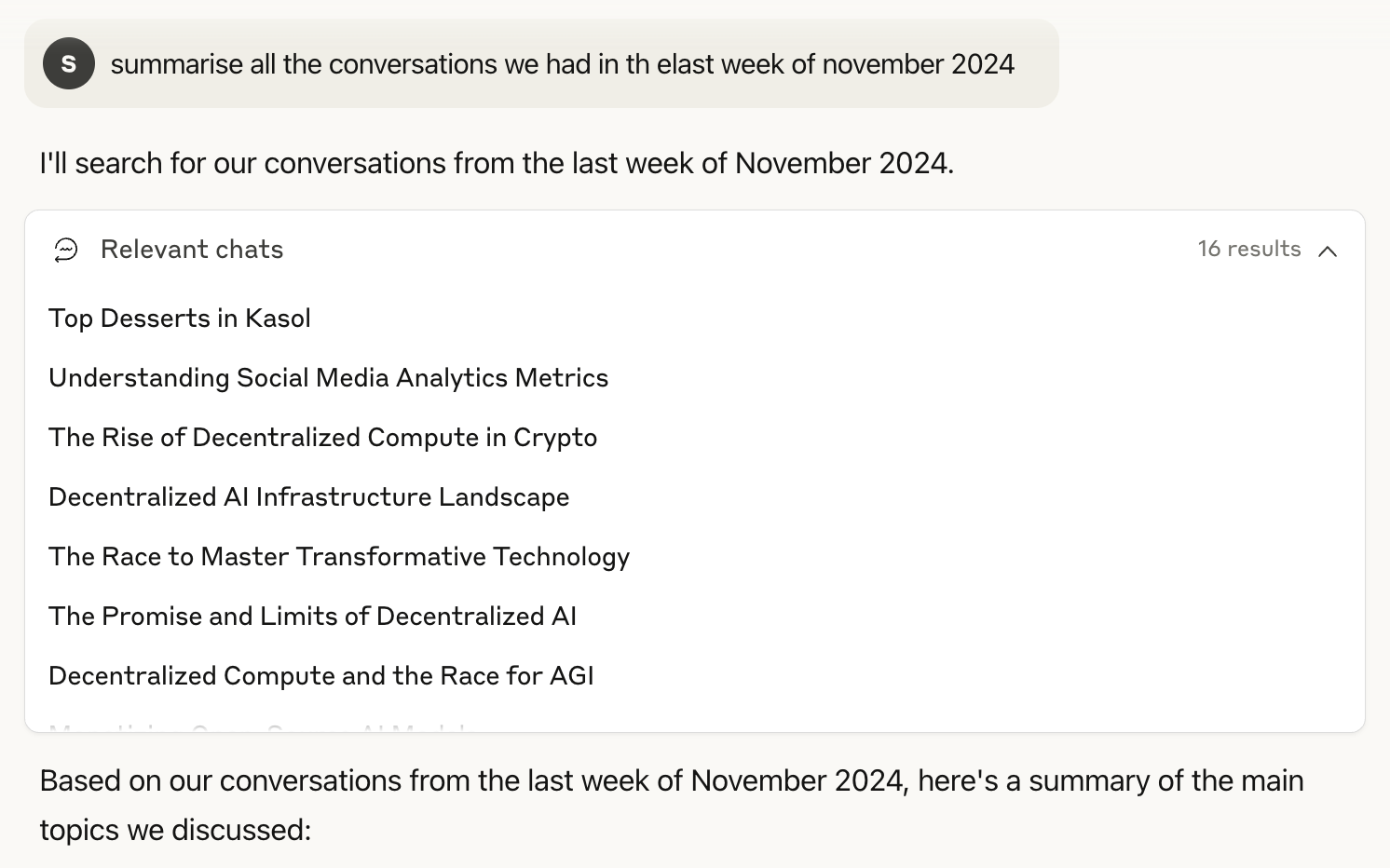
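
The constraints in the `conversation_search` schema can be read as a small validation routine. The sketch below is my own illustration, not Anthropic's code: `build_conversation_search_args` is a hypothetical helper, and rejecting an empty `query` is an extra assumption (the schema only says the field is a required string).

```python
def build_conversation_search_args(query: str, max_results: int = 5) -> dict:
    """Validate arguments against the constraints listed in the schema."""
    # Assumption: an empty or whitespace-only query is treated as missing.
    if not isinstance(query, str) or not query.strip():
        raise ValueError("query is required and must be a non-empty string")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(max_results, bool) or not isinstance(max_results, int):
        raise TypeError("max_results must be an integer")
    # exclusiveMinimum 0 plus maximum 10 means the allowed range is 1..10.
    if not 0 < max_results <= 10:
        raise ValueError("max_results must be between 1 and 10")
    return {"query": query, "max_results": max_results}


args = build_conversation_search_args("Chandni Chowk")
```

With the default of 5 and a hard cap of 10 results per call, a response that cites more conversations than that presumably comes from several calls, which fits the separate per-topic searches described above.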

Temporal Chat Retrieval

The recent_chats tool provides time-based access to your conversation history. When I asked “Can you tell me what we spoke about in the last 10 conversations?” Claude retrieved my most recent chats chronologically and gave me a summary of my recent usage.

The tool also handles specific timeframes. When I asked “What did we discuss in the last week of November 2024?” Claude retrieved 16 conversations from that exact period.
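
A request like "the last week of November 2024" has to become concrete `after` and `before` datetimes somehow. Here is one way that translation could look, as a sketch under stated assumptions: I read "last week" as the final seven days of the month, I use UTC, and `last_week_of_month` and `recent_chats_args` are hypothetical helpers. How Claude actually interprets the phrase, and whether the bounds are inclusive, is not documented in the schema.

```python
from datetime import datetime, timedelta, timezone


def last_week_of_month(year: int, month: int) -> tuple[datetime, datetime]:
    """Return (start, end) covering the final seven days of a month, in UTC."""
    # 'end' is the first instant of the following month.
    if month == 12:
        end = datetime(year + 1, 1, 1, tzinfo=timezone.utc)
    else:
        end = datetime(year, month + 1, 1, tzinfo=timezone.utc)
    return end - timedelta(days=7), end


def recent_chats_args(start: datetime, end: datetime, n: int = 20) -> dict:
    """Build arguments shaped like the recent_chats schema's parameters."""
    if not 0 < n <= 20:
        raise ValueError("n must be between 1 and 20")
    return {
        "after": start.isoformat(),
        "before": end.isoformat(),
        "n": n,
        "sort_order": "asc",  # chronological, per the schema's description
    }


start, end = last_week_of_month(2024, 11)
args = recent_chats_args(start, end)
```

Since `n` is capped at 20, the 16 conversations from that week would fit in a single call under these assumptions.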

    {
      "description": "Retrieve recent chat conversations with customizable sort order (chronological or reverse chronological), optional pagination using 'before' and 'after' datetime filters, and project filtering",
      "name": "recent_chats",
      "parameters": {
        "properties": {
          "after": {
            "anyOf": [{"format": "date-time", "type": "string"}, {"type": "null"}],
            "default": null,
            "description": "Return chats updated after this datetime (ISO format, for cursor-based pagination)",
            "title": "After"
          },
          "before": {
            "anyOf": [{"format": "date-time", "type": "string"}, {"type": "null"}],
            "default": null,
            "description": "Return chats updated before this datetime (ISO format, for cursor-based pagination)",
            "title": "Before"
          },
          "n": {
            "default": 3,
            "description": "The number of recent chats to return, between 1-20",
            "exclusiveMinimum": 0,
            "maximum": 20,
            "title": "N",
            "type": "integer"
          },
          "sort_order": {
            "default": "desc",
            "description": "Sort order for results: 'asc' for chronological, 'desc' for reverse chronological (default)",
            "pattern": "^(asc|desc)$",
            "title": "Sort Order",
            "type": "string"
          }
        },
        "title": "GetRecentChatsInput",
        "type": "object"
      }
    }
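
The schema describes `before` and `after` as cursors for pagination, which suggests a loop like the one below for going past the 20-chat cap. This is a toy model only: `CHATS` and `fake_recent_chats` are hypothetical stand-ins, the filter bounds are assumed to be exclusive, and the cursor is passed as a `datetime` object rather than the ISO string the real tool expects.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical store: 45 chats, one per hour, each with an updated_at timestamp.
BASE = datetime(2025, 1, 1, tzinfo=timezone.utc)
CHATS = [{"id": i, "updated_at": BASE + timedelta(hours=i)} for i in range(45)]


def fake_recent_chats(n=3, before=None, after=None, sort_order="desc"):
    """Toy stand-in using the schema's field names; bounds assumed exclusive."""
    if not 0 < n <= 20:
        raise ValueError("n must be between 1 and 20")
    if sort_order not in ("asc", "desc"):
        raise ValueError("sort_order must be 'asc' or 'desc'")
    rows = [
        c
        for c in CHATS
        if (before is None or c["updated_at"] < before)
        and (after is None or c["updated_at"] > after)
    ]
    rows.sort(key=lambda c: c["updated_at"], reverse=(sort_order == "desc"))
    return rows[:n]


def fetch_all(page_size=20):
    """Walk history newest-first, moving 'before' to the oldest chat seen."""
    collected, cursor = [], None
    while True:
        page = fake_recent_chats(n=page_size, before=cursor)
        if not page:
            return collected
        collected.extend(page)
        # The last row of a 'desc' page is the oldest; use it as the cursor.
        cursor = page[-1]["updated_at"]


all_chats = fetch_all()
```

Cursor-based paging of this kind avoids offset arithmetic: each call only needs the timestamp of the last chat it saw, so new chats arriving mid-walk do not shift the pages.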

ChatGPT vs Claude

A year ago, ChatGPT and Claude’s assistant apps matched each other feature for feature—multiple models, file attachments, projects. Since then, their paths have diverged dramatically. ChatGPT has evolved into a mass-market consumer product, while Claude has deliberately chosen a different trajectory. Anthropic CPO Mike Krieger has acknowledged that OpenAI had “caught lightning in a bottle” with consumer adoption. Instead of chasing that market, Anthropic is focusing on what Claude does best: developer tools, coding, and professional workflows.

Memory implementation perfectly reflects this divergence.

ChatGPT’s hundreds of millions of weekly active users come from all backgrounds—students, parents, hobbyists—who just want a product that works and remembers them without thinking about the mechanics. Every memory component loads automatically, creating instant personalization with zero wait time. The system builds detailed user profiles, learning preferences and patterns that could eventually power targeted features or monetization. It’s the classic consumer tech playbook: make it magical, make it sticky, figure out different ways to monetize later.

Claude’s users represent a different demographic entirely. Anthropic’s more technical users tend to understand how LLMs work. They’re comfortable with explicit control at every level. Just as they choose when to trigger web search or enable extended thinking, they decide when memory is worth invoking. They understand that memory calls add latency, but they make that tradeoff deliberately. Memory becomes just another tool in their arsenal, not an always-on feature. This audience doesn’t need or want extensive profiling; they need a powerful, predictable tool for professional work. They also tend to be more privacy-conscious.

The Memory Design Space

It still amazes me that ChatGPT and Claude—the two top AI assistants—have built completely opposite memory systems. This only goes to show that memory in AI has a massive design space with no right answer or one-size-fits-all technique. You have to work backwards from who your users are and what they need, then build from first principles accordingly.

We’re in uncharted territory. These tools are less than three years old, and nobody knows what happens when someone uses the same AI assistant for a decade. How much should it remember? How should it handle years of accumulated context? Meanwhile, we’re seeing a Cambrian explosion of AI apps, each experimenting with its own memory approach, while the underlying models get more powerful every week. There’s no playbook and no settled best practices, just everyone trying different things and seeing what sticks.

The more I dive into memory, the more fascinated I get. Over the coming weeks, I’ll be dissecting different architectures, analyzing new approaches, and following the latest research. Subscribe below if you want updates as this space unfolds.
