2025-01-07 18:20:00

slack.engineering

At Slack, customer love is our first priority and accessibility is a core tenet of customer trust. We have our own Slack Accessibility Standards that product teams follow to guarantee their features are compliant with Web Content Accessibility Guidelines (WCAG). Our dedicated accessibility team supports developers in following these guidelines throughout the development process. We also frequently collaborate with external manual testers who specialize in accessibility.

In 2022, we started to supplement Slack’s accessibility strategy by setting up automated accessibility tests for desktop to catch a subset of accessibility violations throughout the development process. At Slack, we see automated accessibility testing as a valuable addition to our broader testing strategy. This broader strategy also includes involving people with disabilities early in the design process, conducting design and prototype reviews with these users, and performing manual testing across all of the assistive technologies we support. Automated tools have limits: they can overlook nuanced accessibility issues that require human judgment, such as screen reader usability, and they can flag issues that don’t align with the product’s specific design considerations.

Despite that, we still felt there would be value in integrating an accessibility testing tool into our test frameworks as part of the overall, comprehensive testing strategy. Ideally, we were hoping to add another layer of support by integrating the accessibility validation directly into our existing frameworks so test owners could easily add checks, or better yet, not have to think about adding checks at all.

Exploration and Limitations

Unexpected Complexities: Axe, Jest, and React Testing Library (RTL)

We chose to work with Axe, a popular and easily configurable accessibility testing tool, for its extensive capabilities and compatibility with our current end-to-end (E2E) test frameworks. Axe checks against a wide variety of accessibility guidelines, most of which correspond to specific success criteria from WCAG, and it does so in a way that minimizes false positives.

Initially, we explored the possibility of embedding Axe accessibility checks directly into our React Testing Library (RTL) framework. By wrapping RTL’s render method with a custom render function that included the Axe check, we could remove a lot of friction from the developer workflow. However, we immediately encountered an issue related to the way we’ve customized our Jest setup at Slack. Running accessibility checks through a separate Jest configuration worked, but would require developers to write tests specifically for accessibility, which we wanted to avoid. Reworking our custom Jest setup was deemed too tricky and not worth the time and resource investment, so we pivoted to focus on our Playwright framework.

The Best Solution for Axe Checks: Playwright

With Jest ruled out as a candidate for Axe, we turned to Playwright, the E2E test framework utilized at Slack. Playwright supports accessibility testing with Axe through the @axe-core/playwright package. Axe Core provides most of what you’ll need to filter and customize accessibility checks. It provides an exclusion method right out of the box, to prevent certain rules and selectors from being analyzed. It also comes with a set of accessibility tags to further specify the type of analysis to conduct ('wcag2a', 'wcag2aa', etc.).

Our initial goal was to “bake” accessibility checks directly into Playwright’s interaction methods, such as clicks and navigation, to automatically run Axe without requiring test authors to explicitly call it.

In working towards that goal, we found that the main challenge with this approach stems from Playwright’s Locator object. The Locator object is designed to simplify interaction with page elements by managing auto-waiting, loading, and ensuring the element is fully interactable before any action is performed. This automatic behavior is integral to Playwright’s ability to maintain stable tests, but it complicated our attempts to embed Axe into the framework.

Accessibility checks should run when the entire page or key components are fully rendered, but Playwright’s Locator only ensures the readiness of individual elements, not the overall page. Modifying the Locator could lead to unreliable audits because accessibility issues might go undetected if checks were run at the wrong time.

Another option, using deprecated methods like waitForElement to control when accessibility checks are triggered, was also problematic. These older methods are less optimized, causing performance degradation, potential duplication of errors, and conflicts with the abstraction model that Playwright follows.

So while embedding Axe checks into Playwright’s core interaction methods seemed ideal, the complexity of Playwright’s internal mechanisms required us to explore some further solutions.

Customizations and Workarounds

To circumvent the roadblocks we encountered with embedding accessibility checks into the frameworks, we decided to make some concessions while still prioritizing a simplified developer workflow. We continued to focus on Playwright because it offered more flexibility in how we could selectively hide or apply accessibility checks, allowing us to more easily manage when and where these checks were run. Additionally, Axe Core came with some great customization features, such as filtering rules and using specific accessibility tags.

Using the @axe-core/playwright package, we can describe the flow of our accessibility check:

- Playwright test lands on a page/view

- Axe analyzes the page

- Pre-defined exclusions are filtered out

- Violations and artifacts are saved to a file

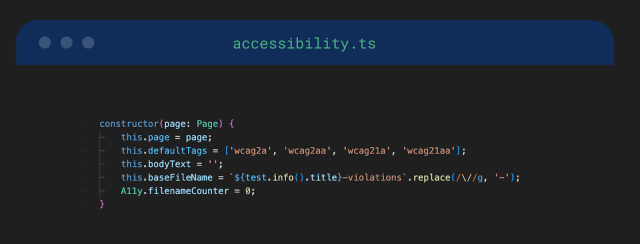

First, we set up our main function, runAxeAndSaveViolations, and customized the scope using what the AxeBuilder class provides.

- We wanted to check for compliance with WCAG 2.1, Levels A and AA

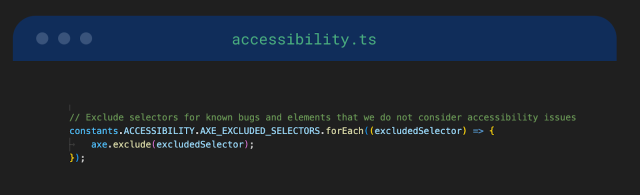
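As a rough sketch of how that scope might be expressed: the snippet below assumes the check is limited through the builder's `withTags` method, which `AxeBuilder` from @axe-core/playwright provides. `TagScopedBuilder`, `WCAG_21_A_AA_TAGS`, and `scopeToWcag21` are hypothetical names introduced here so the example stands alone, and the exact tag list Slack passes is an assumption.

```typescript
// Minimal stand-in for the part of AxeBuilder used here (hypothetical
// interface; the real class is exported by @axe-core/playwright).
interface TagScopedBuilder {
  withTags(tags: string[]): TagScopedBuilder;
}

// axe-core tags for WCAG 2.0 and 2.1 rules at Levels A and AA.
// Assumption: the exact list used at Slack is not shown in the post.
const WCAG_21_A_AA_TAGS: readonly string[] = [
  "wcag2a",
  "wcag2aa",
  "wcag21a",
  "wcag21aa",
];

// Restrict an Axe run to rules that carry one of the tags above.
function scopeToWcag21(builder: TagScopedBuilder): TagScopedBuilder {
  return builder.withTags([...WCAG_21_A_AA_TAGS]);
}
```

In a Playwright test this would presumably be called as `scopeToWcag21(new AxeBuilder({ page }))` before `analyze()`.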

- We created a list of selectors to exclude from our violations report. These fell into two main categories:

  - Known accessibility issues – issues that we are aware of and have already been ticketed

  - Rules that don’t apply – Axe rules outside of the scope of how Slack is designed for accessibility

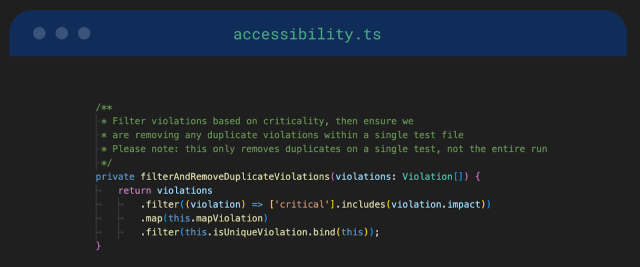

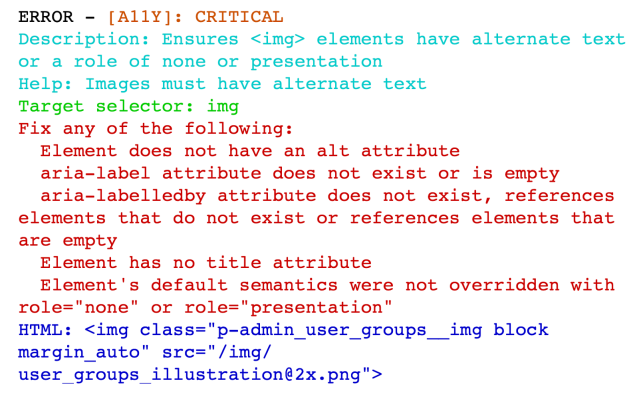
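The two exclusion categories could be applied along these lines. This is a sketch under assumptions, not Slack's actual list: the selector and rule below are placeholders, and `ExcludableBuilder` and `applyExclusions` are hypothetical names. The `exclude` and `disableRules` methods do exist on `AxeBuilder` in @axe-core/playwright.

```typescript
// Minimal stand-in for the AxeBuilder methods used here (hypothetical
// interface so the snippet does not need the real package).
interface ExcludableBuilder {
  exclude(selector: string): ExcludableBuilder;
  disableRules(rules: string[]): ExcludableBuilder;
}

// Category 1: known, already-ticketed issues, excluded by selector.
// Placeholder value; the real selectors are not shown in the post.
const KNOWN_ISSUE_SELECTORS: readonly string[] = [
  '[data-qa="example_known_issue"]',
];

// Category 2: Axe rules that do not apply to the product's design.
// "region" is a real axe rule id, used here purely as an example.
const NOT_APPLICABLE_RULES: readonly string[] = ["region"];

// Apply both exclusion lists to a builder before analysis.
function applyExclusions(builder: ExcludableBuilder): ExcludableBuilder {
  let scoped = builder;
  for (const selector of KNOWN_ISSUE_SELECTORS) {
    scoped = scoped.exclude(selector);
  }
  return scoped.disableRules([...NOT_APPLICABLE_RULES]);
}
```

Keeping the two lists separate makes it easier to delete a selector once its ticket is fixed without touching the rules that are permanently out of scope.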

- We also wanted to filter for duplication and severity level. We created methods to check for the uniqueness of each violation and filter out duplication. We chose to report only the violations rated Critical by Axe. Serious, Moderate, and Minor are the other severity levels Axe reports, and we may add them in the future.

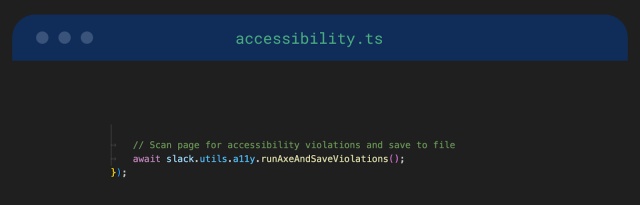
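A minimal sketch of the uniqueness and severity filtering, assuming a violation is identified by its rule id plus the selectors of the affected elements. The `Violation` type is a simplified version of what Axe returns, and `violationKey` and `uniqueCriticalViolations` are hypothetical names; how Slack actually defines uniqueness is not stated.

```typescript
// axe-core's impact levels, from least to most severe.
type Impact = "minor" | "moderate" | "serious" | "critical";

// Simplified shape of an Axe violation (the real result has more fields).
interface Violation {
  id: string; // rule id, e.g. "button-name"
  impact: Impact | null;
  help: string;
  nodes: { target: string[] }[]; // selectors of the affected elements
}

// Assumption: rule id plus sorted element selectors identifies a violation.
function violationKey(v: Violation): string {
  const targets = v.nodes.map((n) => n.target.join(" ")).sort().join("|");
  return `${v.id}::${targets}`;
}

// Keep only critical violations that have not been seen before.
// Passing the same `seen` set across calls dedupes across several checks.
function uniqueCriticalViolations(
  violations: Violation[],
  seen: Set<string> = new Set<string>(),
): Violation[] {
  const result: Violation[] = [];
  for (const v of violations) {
    if (v.impact !== "critical") continue;
    const key = violationKey(v);
    if (seen.has(key)) continue;
    seen.add(key);
    result.push(v);
  }
  return result;
}
```

Widening the report to Serious violations later would only require changing the impact comparison.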

- We took advantage of the Playwright fixture model. Fixtures are Playwright’s way to build up and teardown state outside of the test itself. Within our framework we’ve created a custom fixture called

slackwhich provides access to all of our API calls, UI views, workflows and utilities related to Slack. Using this fixture, we can access all of these resources directly in our tests without having to go through the setup process every time. - We moved our accessibility helper to be part of the pre-existing

slackfixture. This allowed us to call it directly in the test spec, minimizing some of the overhead for our test authors.

- We also took advantage of the ability to customize Playwright’s

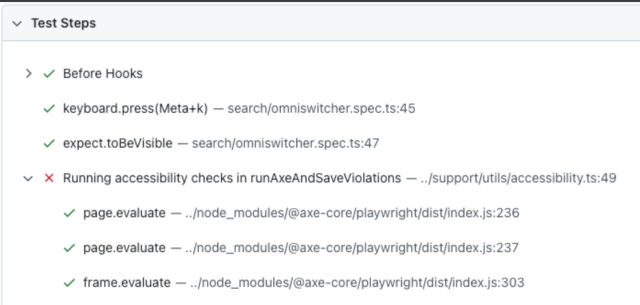

test.step. We added the custom label “Running accessibility checks inrunAxeAndSaveViolations” to make it easier to detect where an accessibility violation has occurred:
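The labeled step might look roughly like the sketch below. `StepFn` is a reduced, hypothetical version of the signature of Playwright's `test.step(title, body)`, declared locally so the snippet has no dependencies; `withA11yStep` is likewise an illustrative name, not Slack's helper.

```typescript
// Reduced shape of Playwright's test.step: run `body` under a titled step.
type StepFn = <T>(title: string, body: () => Promise<T>) => Promise<T>;

// The custom label quoted in the post.
const A11Y_STEP_LABEL =
  "Running accessibility checks in runAxeAndSaveViolations";

// Run an accessibility check inside a labeled step so that reports
// show exactly where the violation was detected.
function withA11yStep<T>(step: StepFn, check: () => Promise<T>): Promise<T> {
  return step(A11Y_STEP_LABEL, check);
}
```

In a real spec the first argument could be `(title, body) => test.step(title, body)`, with the Axe analysis passed as `check`.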

Placement of Accessibility Checks in End to End Tests

To kick the project off, we set up a test suite that mirrored our suite for testing critical functionality at Slack. We renamed the suite to make it clear it was for accessibility tests, and we set it to run as non-blocking. This meant developers would see the test results, but a failure or violation would not prevent them from merging their code to production. This initial suite encompassed 91 tests in total.

Strategically, we considered the placement of accessibility checks within these critical flow tests. In general, we aimed to add an accessibility check for each new view, page, or flow covered in the test. In most cases, this meant placing a check directly after a button click, for example, or a link that leads to navigation. In other scenarios, our accessibility check needed to be placed after signing in as a second user or after a redirect.

It was important to make sure the same view wasn’t being analyzed twice in one test, or potentially twice across multiple tests with the same UI flow. Duplication like this would result in unnecessary error messages and saved artifacts, and slow down our tests. We were also careful to place our Axe calls only after the page or view had fully loaded and all content had rendered.

With this approach, we needed to be deeply familiar with the application and the context of each test case.

Violations Reporting

We spent some time iterating on our accessibility violations report. Initially, we created a simple text file to save the results of a local run, storing it in an artifacts folder. A few developers gave us early feedback and requested screenshots of the pages where accessibility violations occurred. To achieve this, we integrated Playwright’s screenshot functionality and began saving these screenshots alongside our text report in the same artifact folder.

To make our reports more coherent and readable, we leveraged the Playwright HTML Reporter. This tool not only aggregates test results but also allows us to attach artifacts such as screenshots and violation reports to the HTML output. By configuring the HTML reporter, we were able to display all of our accessibility artifacts, including screenshots and detailed violation reports, in a single test report.
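One way to prepare those artifacts is to build a small list of attachment descriptors per analyzed view, sketched here under assumptions. The file naming and the `buildA11yAttachments` helper are hypothetical; the `name`, `body`, and `contentType` fields mirror what Playwright's `testInfo.attach(name, { body, contentType })` accepts.

```typescript
// One artifact to attach to the HTML report. `B` is the binary type of
// the screenshot (a Buffer when it comes from Playwright's page.screenshot()).
interface ReportAttachment<B> {
  name: string;
  body: string | B;
  contentType: string;
}

// Build the text report and screenshot attachments for one analyzed view.
// Assumption: the naming scheme is illustrative, not Slack's.
function buildA11yAttachments<B>(
  viewName: string,
  reportText: string,
  screenshot: B,
): ReportAttachment<B>[] {
  return [
    {
      name: `${viewName}-a11y-violations.txt`,
      body: reportText,
      contentType: "text/plain",
    },
    {
      name: `${viewName}-a11y-screenshot.png`,
      body: screenshot,
      contentType: "image/png",
    },
  ];
}
```

Each descriptor would then be passed to `testInfo.attach` inside the test, so that the report and the screenshot appear side by side in the HTML output.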

Lastly, we wanted our violation error message to be helpful and easy to understand, so we wrote some code to pull out key pieces of information from the violation. We also customized how the violations were displayed in the reports and on the console, by parsing and condensing the error message.
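As an illustration of that condensing step, the sketch below picks a few fields that Axe includes on every violation (`id`, `impact`, `help`, `helpUrl`, and the node `target` selectors) and renders them as a short message. Which fields Slack surfaces and the exact layout are assumptions, and `formatViolation` is a hypothetical name.

```typescript
// Fields of an Axe violation used in the condensed message
// (a simplified subset of the real result object).
interface ViolationSummaryInput {
  id: string;
  impact: string | null;
  help: string;
  helpUrl: string;
  nodes: { target: string[] }[];
}

// Condense one violation into a three-line, human-readable message.
function formatViolation(v: ViolationSummaryInput): string {
  const selectors = v.nodes.map((n) => n.target.join(" ")).join(", ");
  return [
    `[${v.impact ?? "unknown"}] ${v.id}: ${v.help}`,
    `  elements: ${selectors}`,
    `  more info: ${v.helpUrl}`,
  ].join("\n");
}
```

The same string can be written to the console and saved in the report, which keeps the two outputs consistent.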

Environment Setup and Running Tests

Once we had integrated our Axe checks and set up our test suite, we needed to determine how and when developers should run them. To streamline the process for developers, we introduced an environment flag, A11Y_ENABLE, to control the activation of accessibility checks within our framework. By default, we set the flag to false, preventing unnecessary runs.

This setup allowed us to offer developers the following options:

- On-Demand Testing: Developers can manually enable the flag when they need to run accessibility checks locally on their branch.

- Scheduled Runs: Developers can configure periodic runs during off-peak hours. We have a daily regression run configured in Buildkite that pipes accessibility test results into a Slack alert channel.

- CI Integration: Optionally, the flag can be enabled in continuous integration pipelines for thorough testing before merging significant changes.
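The flag check itself can be very small. The sketch below assumes the flag is read from the process environment and that the string "true" (in any letter case) enables the checks; the post only states the flag name and its default of false. `Env` and `isA11yEnabled` are hypothetical names.

```typescript
// Environment variables as a plain map, so the function can be tested
// without touching the real process environment.
type Env = Record<string, string | undefined>;

// Accessibility checks run only when A11Y_ENABLE is explicitly "true".
// Assumption: any other value, or an unset flag, means disabled.
function isA11yEnabled(env: Env): boolean {
  return (env["A11Y_ENABLE"] ?? "false").trim().toLowerCase() === "true";
}
```

A helper such as runAxeAndSaveViolations could return early when `isA11yEnabled(process.env)` is false, so that existing tests pay no cost unless the flag is set locally, in a scheduled run, or in CI.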

Triage and Ownership

Ownership of individual tests and test suites is often a hot topic when it comes to maintaining tests. Once we had added Axe calls to the critical flows in our Playwright E2E tests, we needed to decide who would be responsible for triaging accessibility issues discovered via our automation and who would own the test maintenance for existing tests.

At Slack, we enable developers to own test creation and maintenance for their tests. To help developers better understand the framework changes and new accessibility automation, we created documentation and partnered with the internal Slack accessibility team to come up with a comprehensive triage process that would fit into their existing workflow for triaging accessibility issues.

The internal accessibility team at Slack had already established a process for triaging and labeling incoming accessibility issues, using the internal Slack Accessibility Standards as a guideline. To enhance the process, we created a new label for “automated accessibility” so we could track the issues discovered via our automation.

To make cataloging these issues easier, we set up a Jira workflow in our alerts channel that would spin up a Jira ticket with a pre-populated template. The ticket is created via the workflow and automatically labeled with “automated accessibility” and placed in a Jira Epic for triaging.

Conducting Audits

We perform regular audits of our accessibility Playwright calls to check for duplication of Axe calls, and ensure proper coverage of accessibility checks across tests and test suites.

We developed a script and an environment flag specifically to facilitate the auditing process. Audits can be performed either through sandbox test runs (ideal for suite-wide audits) or locally (for specific tests or subsets). When performing an audit, running the script allows us to take a screenshot of every page that performs an Axe call. The screenshots are then saved to a folder and can be easily compared to spot duplicates.
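Part of the duplicate spotting could be narrowed down before comparing screenshots by eye. The sketch below assumes the audit script also records which test and which view each Axe call ran against; that record format (`AuditRecord`) and the `findDuplicateViews` helper are hypothetical and not described in the post.

```typescript
// One entry per Axe call captured during an audit run (hypothetical format).
interface AuditRecord {
  testName: string;
  view: string; // identifier of the page or view that was analyzed
  screenshotPath: string;
}

// Group audit records by view and return only the views analyzed more
// than once, which are the candidates for a redundant Axe call.
function findDuplicateViews(records: AuditRecord[]): Map<string, AuditRecord[]> {
  const byView = new Map<string, AuditRecord[]>();
  for (const record of records) {
    const group = byView.get(record.view) ?? [];
    group.push(record);
    byView.set(record.view, group);
  }
  const duplicates = new Map<string, AuditRecord[]>();
  byView.forEach((group, view) => {
    if (group.length > 1) duplicates.set(view, group);
  });
  return duplicates;
}
```

The screenshots listed under each duplicated view are then the only ones that need a visual comparison.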

This process is more manual than we like, and we are looking into ways to eliminate this step, potentially leaning on AI assistance to perform the audit for us – or have AI add our accessibility calls to each new page/view, thereby eliminating the need to perform any kind of audit at all.

What’s Next

We plan to continue partnering with the internal accessibility team at Slack to design a small blocking test suite. These tests will be dedicated to the flows of core features within Slack, with a focus on keyboard navigation.

We’d also like to explore AI-driven approaches to the post-processing of accessibility test results and look into the option of having AI assistants audit our suites to determine the placement of our accessibility checks, further reducing the manual effort for developers.

Closing Thoughts

We had to make some unexpected trade-offs in this project, balancing the practical limitations of automated testing tools with the goal of reducing the burden on developers. While we couldn’t integrate accessibility checks completely into our frontend frameworks, we made significant strides towards that goal. We simplified the process for developers to add accessibility checks, ensured test results were easy to interpret, provided clear documentation, and streamlined triage through Slack workflows. In the end, we were able to add test coverage for accessibility in the Slack product, helping to ensure that our customers who require accessibility features have a consistent experience.

Our automated Axe checks have reduced our reliance on manual testing and now complement other essential forms of testing, like manual testing and usability studies. At the moment, developers need to manually add checks, but we’ve laid the groundwork to make the process as straightforward as possible, with the possibility of AI-driven creation of accessibility tests.

Roadblocks like framework complexity or setup difficulties shouldn’t discourage you from pursuing automation as part of a broader accessibility strategy. Even when it’s not feasible to hide the automated checks completely behind the scenes of the framework, there are ways to make the work impactful by focusing on the developer experience. This project has not only strengthened our overall accessibility testing approach, it has also reinforced the culture of accessibility that has always been central to Slack. We hope it inspires you to look more closely at how automated accessibility testing might fit into your testing strategy, even if it requires navigating a few technical hurdles along the way.

Thank you to everyone who spent significant time on the editing and revision of this blog post – Courtney Anderson-Clark, Lucy Cheng, Miriam Holland and Sergii Gorbachov.

And a massive thank you to the Accessibility Team at Slack, Chanan Walia, Yura Zenevich, Chris Xu and Hye Jung Choi, for your help with everything related to this project, including editing this blog post!
