Kanwal Mehreen

2024-12-26 14:10:00

www.kdnuggets.com

Image by Author

Image captioning is a famous multimodal task that combines computer vision and natural language processing. The research topic has undergone considerable study over the years, and the models available today are substantially strong enough to handle a large variety of cases.

In this article, we will explore the use of Hugging Face’s transformer library to utilize the latest sequence-to-sequence models using a Vision Transformer encoder and a GPT-based decoder. We will see how HuggingFace makes it simple to use openly available models to perform image captioning.

Model Selection and Architecture

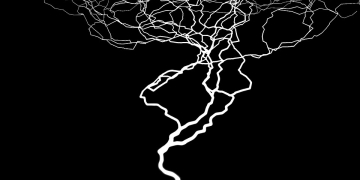

We use the ViT-GPT2-image-captioning pre-trained model by nlpconnect available on HuggingFace. Image captioning takes an image as an input and outputs a textual description of the image. For this task, we use a multi-modal model divided into two parts; an encoder and a decoder. The encoder takes the raw image pixels as input and uses a neural network to transform them into a 1-dimensional compressed latent representation. In the case of the chosen model, the encoder is based on the recent Vision Transformer (ViT) model, which applies the state-of-the-art transformer architecture to image patches. The encoder input is then passed as an input to a language model called the decoder. The decoder, in our case GPT-2, executes in an auto-regressive manner generating one output token at a time. When the model is trained end-to-end on an image-description dataset, we get an image captioning model that generates tokens to describe the image.

Setup and Inference

We first set up a clean Python environment and install all required packages to run the model. In our case, we just need the HuggingFace transformer library that runs on a PyTorch backend. Run the below commands for a fresh install:

python -m venv venv

source venv/bin/activate

pip install transformers torch Pillow

From the transformers package, we need to import the VisionEncoderDecoderModel, ViTImageProcessor, and the AutoTokenizer.

The VisionEncoderDecoderModel provides an implementation to load and execute a sequence-to-sequence model in HuggingFace. It allows to easily load and generate tokens using built-in functions. The ViTImageProcessor resizes, rescales, and normalizes the raw image pixels to preprocess it for the ViT Encoder. The AutoTokenizer will be used at the end to convert the generated token IDs into human-readable strings.

from transformers import VisionEncoderDecoderModel, ViTImageProcessor, AutoTokenizer

import torch

from PIL import Image

We can now load the open-source model in Python. We load all three models from the pre-trained nlpconnect model. It is trained end-to-end for the image captioning task and performs better due to end-to-end training. Nonetheless, HuggingFace provides functionality to load separate encoder and decoder models. Note, that the tokenizer should be supported by the decoder used, as the generated token IDs must match for correct decoding.

MODEL_ID = "nlpconnect/vit-gpt2-image-captioning"

model = VisionEncoderDecoderModel.from_pretrained(MODEL_ID)

feature_extractor = ViTImageProcessor.from_pretrained(MODEL_ID)

tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)

Using the above models, we can generate captions for any image using a simple function defined as follows:

def generate_caption(img_path: str):

i_img = Image.open(img_path)

pixel_values = feature_extractor(images=i_img, return_tensors="pt").pixel_values

output_ids = model.generate(pixel_values)

response = tokenizer.decode(output_ids[0], skip_special_tokens=True)

return response.strip()

The function takes a local image path and uses the Pillow library to load an image. First, we need to process the image and get the raw pixels that can be passed to the ViT Encoder. The feature extractor resizes the image and normalizes the pixel values returning image pixels of size 224 by 224. This is the standard size for ViT-based architectures but you can change this based on your model.

The image pixels are then passed to the image captioning model that automatically applies the encoder-decoder model to output a list of generated token IDs. We use the tokenizer to decode the integer IDs to their corresponding words to get the generated image caption.

Call the above function on any image to test it out!

IMG_PATH="PATH_TO_IMG_FILE"

response = generate_caption(IMG_PATH)

A sample output is shown below:

Generated Caption: a large elephant standing on top of a lush green field

Conclusion

In this article, we explored the basic use of HuggingFace for image captioning tasks. The transformers library provides flexibility and abstractions in the above process and there is a large database of publically available models. You can tweak the process in multiple ways and apply the same pipeline to various models to see what suits you best.

Feel free to try any model and architecture as new models are pushed every day and you may find better models each day!

Kanwal Mehreen Kanwal is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook “Maximizing Productivity with ChatGPT”. As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She’s also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.

Transform your cleaning routine with the Shark AI Ultra Voice Control Robot Vacuum! This high-tech marvel boasts over 32,487 ratings, an impressive 4.2 out of 5 stars, and has been purchased over 900 times in the past month. Perfect for keeping your home spotless with minimal effort, this vacuum is now available for the unbeatable price of $349.99!

Don’t miss out on this limited-time offer. Order now and let Shark AI do the work for you!

Support Techcratic

If you find value in Techcratic’s insights and articles, consider supporting us with Bitcoin. Your support helps me, as a solo operator, continue delivering high-quality content while managing all the technical aspects, from server maintenance to blog writing, future updates, and improvements. Support Innovation! Thank you.

Bitcoin Address:

bc1qlszw7elx2qahjwvaryh0tkgg8y68enw30gpvge

Please verify this address before sending funds.

Bitcoin QR Code

Simply scan the QR code below to support Techcratic.

Please read the Privacy and Security Disclaimer on how Techcratic handles your support.

Disclaimer: As an Amazon Associate, Techcratic may earn from qualifying purchases.